November 7, 2016

Return values

This is a tale of two algorithms.

Every now and then, someone publishes a piece of crossover writing that blows me away. Last week, Claudia Lo at Rock Paper Shotgun examined the algorithms behind relationship simulation in the indie game RimWorld by decompiling its code:

> The question we're asking is, 'what are the stories that RimWorld is already telling?' Yes, making a game is a lot of work, and maybe these numbers were just thrown in without too much thought as to how they'd influence the game. But what kind of system is being designed, that in order to 'just make it work', you wind up with a system where there will never be bisexual men? Or where all women, across the board, are eight times less likely to initiate romance?

I want to take a moment to admire Lo's writing, which takes a difficult technical subject (bias in object-oriented simulation code) and explains it in a way that's both readable and ultimately damning to its subject. It's a calm dissection of how stereotypes can get encoded (literally!) into entertainment, and even goes out of its way to be generous to the developer. I haven't talked much about games journalism in the last few years, but this is the kind of work I wish I'd thought to do when I was still writing.

In short, Lo notes that the code for RimWorld combines sexuality, gender, age, and emotional modeling in a way that's more than a little unsettling: women in the game are all bisexual and may be attracted to much older partners, while men have a narrower (and notably younger) attraction range. The game also models penalties for rejection, but not for being harassed, which leaves players trying to solve bizarre problems like "attractive lesbians that destroy community morale."

Part of what makes Lo's story interesting is how deeply this particular simulation reflects not just general social biases, but the particular biases of its developer. It took a lot more work to encode a complex, weighted system of asymmetrical attraction than it would have to just use the same basic algorithm for everyone. Someone planned this very carefully. In fact, since the developer jumped into the comments on the piece with a pseudoscientific rant about the non-existence of bisexual men, we know that he's thought way too much about it. He's really invested in this view of "how people are," and very upset that anyone would think that it's just the slightest bit unrealistic or ignorant.
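To make the "more work" point concrete, here's a minimal sketch in Python. It is not RimWorld's code (the game isn't written in Python, and I haven't copied anything from it). The function names and the base chance are hypothetical, and the divide-by-eight only mirrors the "eight times less likely" figure from Lo's piece quoted above.

```python
def symmetric_initiation_chance(base_chance: float) -> float:
    """One rule for every character: gender never enters the calculation."""
    return base_chance


def asymmetric_initiation_chance(base_chance: float, gender: str) -> float:
    """Hypothetical special-casing, for illustration only.

    The factor of 8 echoes the figure quoted from Lo's article; it is an
    assumption here, not a value taken from the game's source.
    """
    if gender == "female":
        # This extra branch is a decision somebody had to sit down and write.
        return base_chance / 8
    return base_chance


# Same hypothetical base chance, two different design choices.
base = 0.8
print(symmetric_initiation_chance(base))
print(asymmetric_initiation_chance(base, "female"))
```

The symmetric version is the shorter one. Every asymmetry is an additional parameter, branch, or weight that had to be added on purpose.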

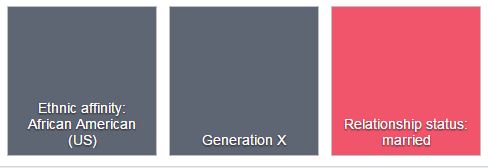

Keep that in mind when reading ProPublica's investigation into Facebook ad preferences, in which advertisers can target ads toward (or against) particular "ethnic affinities" in violation of federal laws against discrimination in housing and other services. "Affinity" is Facebook's term, a way of hiding behind "we're not actually discriminating on race." Even for the blinkered Silicon Valley, it's astonishing that nobody thought about the ways that this could be misapplied.

The existence of these affinities may be confusing, since Facebook never actually asks about your ethnicity when setting up a profile. Instead, it generates this metadata based on some kind of machine-learning process as you use the service. As is typical for Facebook, this is not only a very bad idea, it's also very badly implemented. I know this myself: Facebook thinks I have an ethnic affinity of "African-American (US)," probably because my friend group via Urban Artistry is largely black, even though I am one of the whitest people in America (there doesn't seem to be an "Ethnic Affinity: White" tag, maybe because that's considered the default setting).

Both Facebook and RimWorld are examples of programming real-world bias into technology, but of the two it's probably Facebook that bothers me more. For the game, a developer very intentionally designed this system, and is clear about his reasons for doing so. But in Facebook's case, this behavior is partially emergent, which gives it deniability. There's no source code that we can decompile, either because it's hidden away on Facebook's servers or because it's the output of a long, machine-generated process and even Facebook can't directly "read" it. The only way to discover these problems is indirectly, the way some news organizations are trying to collect and analyze Facebook's political ads via user reporting tools.

As more and more of the world becomes data-driven, it's easy to see how emergent behavior could reinforce existing biases if not carefully checked and guarded. When the behavior in question is Pokémon Go redlining, maybe it's not such a big deal, but when it becomes housing, or job opportunities, or access to and pricing for services, the effects will be far more serious. Class action lawsuits are a reasonable start, but I think it may be time to consider stronger regulation on which personal data companies can retain and use (granted, I don't have any good answers as to what that regulation would look like). After all, it's pretty clear that tech won't regulate itself.

Addendum: on journalism and public policy

I'm posting this the night before Election Day in the USA. Like a lot of newsrooms, The Seattle Times has a strict non-partisan policy for its journalists, so I can tell you to vote (please do! it's important!), but I can't tell you how I think you should vote or how I voted, and I'm discouraged from taking part in public activities that would signal bias, such as caucusing for either party. It's a condition of my employment at the paper.

Here's what I think is funny: in the post above, I lay out a strong but (I think) reasoned argument for public policy around the use of personal data. While I'm not particularly well-sourced compared to the journalists at ProPublica, I am one of the few people at the Times who can speak knowledgeably about how data is stored and how databases interact. I can make this argument to you, and feel like it carries some authority behind it.

But I can only make that argument because our political parties do not (yet) have any coherent preference on how personal data should be treated. If, at some point, the use of personal data were to become politicized by one side or another, I'd no longer be free to share this argument with you. The merits of my position wouldn't have changed, nor would my expertise, but I'd no longer be considered "objective" or "unbiased."

The idea that reporters do not have opinions, or can only have opinions as long as the subjects don't matter, seems self-evidently silly to me. Data privacy is a useful thought experiment: in this case, I'm safe as long as the government never gets its act together. If that seems like a terrible way to run the fourth estate — indeed, if this sounds like a recipe for the proliferation of fact-free publishing outlets encouraged by the malicious inattention of Facebook — I can't disagree. But to tie this to my original point, however this election turns out, I think a lot of journalists are going to need to ask themselves some hard questions about the value of "objectivity" in an algorithm-powered media universe.