September 9, 2016

Classless components

In early August, I delivered my talk on "custom elements in production" to the CascadiaFest crowd. We've been using these new web platform features at the Seattle Times for more than two years now, and I wanted to share the lessons we've learned, and encourage others to give them a shot. Apart from some awkward technical problems with the projector, I actually think the talk went pretty well:

One of the big changes in the web component world, which I touched on briefly, is the transition from the V0 API that originally shipped in Chrome to the V1 spec currently being finalized. For the most part, the changeover is not a difficult one: some callbacks have been renamed, and there's a new function used to register the element definition.

There is, however, one aspect of the new spec that is deeply problematic. In V0, to avoid complicated questions around parser timing and integration, elements were only defined using a prototype object, with the constructor handled internally and inheritance specified in the options hash. V1 relies instead on an ES6 class definition, like so:

class CustomElement extends HTMLElement {
  constructor() {
    super();
  }
}

customElements.define("custom-element", CustomElement);

When I wrote my presentation, I didn't think that this would be a huge problem. The conventional wisdom on classes in JavaScript is that they're just syntactic sugar for the existing prototype system — it should be possible to write a standard constructor function that's effectively identical, albeit more verbose.

The conventional wisdom, sadly, is wrong, as became clear once I started testing the V1 API currently available behind a flag in Chrome Canary. In fact, ES6 classes are not just a wrapper for prototypes: specifically, the super() call is not a straightforward translation to older inheritance models, especially when used to extend browser built-ins as it does here. No matter what workarounds I tried, Chrome's V1 custom elements implementation threw errors when passed an ES5 constructor with an otherwise valid prototype chain.
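The mismatch can be reproduced outside the browser. What follows is a minimal sketch, not the code from my tests: Base is a stand-in class used in place of HTMLElement (an assumption, so that it runs in plain Node), and the element names are hypothetical. Calling a class constructor the ES5 way throws, while Reflect.construct gets through. Since Reflect.construct is itself an ES6 feature, this only illustrates the gap between the two models; it does nothing for IE10.

```javascript
// Stand-in for a built-in such as HTMLElement (assumption: not the real DOM class).
class Base {
  constructor() {
    this.ready = true;
  }
}

// ES5-style inheritance: the prototype chain is valid, but the "super" call fails.
function LegacyElement() {
  Base.call(this); // throws TypeError: class constructors must be invoked with new
}
LegacyElement.prototype = Object.create(Base.prototype);
LegacyElement.prototype.constructor = LegacyElement;

var legacyError = null;
try {
  new LegacyElement();
} catch (err) {
  legacyError = err;
}

// Reflect.construct runs Base's constructor while keeping our prototype.
function ReflectElement() {
  return Reflect.construct(Base, [], ReflectElement);
}
ReflectElement.prototype = Object.create(Base.prototype);
ReflectElement.prototype.constructor = ReflectElement;

var instance = new ReflectElement();
console.log(legacyError instanceof TypeError, instance instanceof ReflectElement);
```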

In a perfect world, we would just use the new syntax. But at the Seattle Times, we target Internet Explorer 10 and up, which doesn't support the class keyword. That means that we need to be able to write (or transpile to) an ES5 constructor that will work in both environments. Since the specification is written only in terms of classes, I did what you're supposed to do and filed a bug against the spec, asking how to write a backwards-compatible element definition.

It shouldn't surprise me, but the responses from the spec authors were wildly unhelpful. Apple's representative flounced off, insisting that it's not his job to teach people how to use new features. Google's rep closed the bug as irrelevant, stating that supporting older browsers isn't their problem.

Both of these statements are wrong, although only the second is wrong in an interesting way. Obviously, if you work on standards specifications, it is part of your job to educate developers. A spec isn't just for browsers to implement — if it were, it'd be written in a machine-readable language like WebIDL, or as a series of automated tests, not in stilted (but still recognizable) English. Indeed, the same Google representative that closed my issue previously defended the "tutorial-like" introductory sections elsewhere. Personally, I don't think a little consistency is too much to ask.

But it is the dismissal of older browsers, and the spec's responsibility to them, that I find more jarring. Obviously, a spec for a new feature needs to be free to break from the past. But a big part of the Extensible Web Manifesto, which directly references web components and custom elements, is that the platform should be explainable, and driven by feedback from real web developers. Specifically, it states:

Making new features easy to understand and polyfill introduces a virtuous cycle:

- Developers can ramp up more quickly on new APIs, providing quicker feedback to the platform while the APIs are still the most malleable.

- Mistakes in APIs can be corrected quickly by the developers who use them, and library authors who serve them, providing high-fidelity, critical feedback to browser vendors and platform designers.

- Library authors can experiment with new APIs and create more cow-paths for the platform to pave.

In the case of the V1 custom elements spec, feedback from developers is being ignored — I'm not the only person that has complained publicly about the way that the class-based definitions are a pain to use in a mixed-browser environment. But more importantly, the spec is actively hostile to polyfills in a way that the original version was not. Authors currently working to shim the V1 API into browsers have faced three problems:

- Calling super() invokes magic that's hard to reproduce in ES5, and needlessly so.

- HTMLElement isn't a callable function in older environments, and has to be awkwardly monkey-patched.

- Apple publicly opposes extending anything other than the generic HTMLElement, and has only allowed it into the spec so they can kill it later.

The end result is that you can write code that will work in old and new browsers, but it won't exactly look like real V1 code. It's not a true polyfill, more of a mini-framework that looks almost — but not exactly! — like the native API.

I find this frustrating in part for its inelegance, but more so because it fundamentally puts the lie to the principles of the extensible web. You can't claim that you're explaining the capabilities of the platform when your API is polyfill-hostile, since a polyfill is the mechanism by which we seek to explain and extend those capabilities.

More importantly, there is no surer way to slow adoption of a web feature than to artificially restrict its usage, and to refuse to educate developers on how to use it. The spec didn't have to be this way: they could detail ES5 semantics, and help people who are struggling, but they've chosen not to care. As someone who literally stood on a stage in front of hundreds of people and advocated for this feature, that's insulting.

Contrast the bullying attitude of the custom elements spec authors with the advocacy that's been done on behalf of Service Worker. You couldn't swing a dead cat in 2016 without hitting a developer advocate talking up their benefits, creating detailed demos, offering advice to people trying them out, and talking about how they gracefully degrade in older browsers. As a result, chances are good that Service Worker will ship in multiple browsers, and see widespread adoption, by the end of next year.

Meanwhile, custom elements will probably languish in relative obscurity, as they've done for many years now. It's a shame, because I'd argue that the benefits of custom elements are strong enough to justify using them even via the old V0 polyfill. I still think they're a wonderful way to build and declare UI, and we'll keep using them at the Times. But whatever wider success they achieve will be despite the spec, not because of it. It's a disgrace to the idea of an extensible web. And the authors have only themselves to blame.

August 10, 2016

RIP Chrome apps

Update: Well, that was prescient.

At least once a day, I log into the Chrome Web Store dashboard to check on support requests and see how many users I've still got. Caret has held steady for the last year or so at about 150,000 active users, give or take ten thousand, and the support and feature requests have settled into a predictable rut:

- People who can't run Caret because their version of Chrome is too old, and I've started using new ES6 features that aren't supported six browser versions back.

- People who want split-screen support, and are out of luck barring a major rewrite.

- People who don't like the built-in search/replace functionality, which makes sense, because it's honestly pretty terrible.

- People who don't like the icons, and are just going to have to get over it.

In a few cases, however, users have more interesting questions about the fundamental capabilities of developer tooling, like file system monitoring or plugging into the OS in a deeper way. And there I have bad news, because as far as I can tell, Chrome apps are no longer actively developed by the Chromium team at all, and probably never will be again.

I don't think Chrome apps are going away immediately — they're still useful and used by a lot of third-party companies — but it's pretty clear from the dev side of things that Google's heart isn't in it anymore. New APIs have ceased to roll out, and apps don't get much play at conferences. The new party line is all about progressive web apps, with browser extensions for the few cases where you need more capabilities.

Now, progressive web apps are great, and anything that moves offline applications away from a single browser and out to the wider web is a good thing. But the fact remains that while a large number of Chrome apps can become PWAs with little fuss, Caret can't. Because it interacts with the filesystem so heavily, in a way that assumes a broader ecosystem of file-based tools (like Git or Node), there's actually no path forward for it using browser-only APIs. As such, it's an interesting litmus test for just how far web apps can actually reach — not, as some people have wrongly assumed, because there's an inherent performance penalty on the web, but because of fundamental limits in the security model of the browser.

Bounding boxes

What's considered "possible" for a web app in, say, 2020? It may be easier to talk about what isn't possible, which avoids the judgment call on what is "suitable." For example, it's a safe bet that the following capabilities won't ever be added to the web, even though they've been hotly debated in and out of standards committees for years:

- Read/write file access (died when the W3C pulled the plug on the Directories part of the Filesystem API)

- Non-HTTP sockets and networking (an endless number of reasons, but mostly "routers are awful")

There are also a bunch of APIs that are in experimental stages, but which I seriously doubt will see stable deployment in multiple browsers, such as:

- Web Bluetooth (enormous security and usability issues)

- Web USB (same as Bluetooth, but with added attacks from the physical connection)

- Battery status (privacy concerns)

- Web MIDI

It's tough to get worked up about a lot of the initiatives in the second list, which mostly read as a bad case of mobile envy. There are good reasons not to let a web page have drive-by access to hardware, and who's hooking up a MIDI keyboard to a browser anyway? The physical web is a better answer to most of these problems.

When you look at both lists together, one thing is clear: Chrome apps have clearly been a testing ground for web features. Almost all the not-to-be-implemented web APIs have counterparts in Chrome apps. And in the end, the web did learn from it — mainly that even in a sandboxed, locked-down, centrally distributed environment, giving developers that much power with so little install friction could be really dangerous. Rogue extensions and apps are a serious problem for Chrome, as I can attest: about once a week, shady people e-mail me to ask if they can purchase Caret. They don't explicitly say that they're going to use it to distribute malware and takeover ads, but the subtext is pretty clear.

The great thing about the web is that it can run code without any installation step, but that's also the worst thing about it. Even as a huge fan of the platform, the idea that any of the uncountable pages I visit in any given week could access USB directly is pretty chilling, especially when combined with exploits for devices that are plugged in, like hacking a phone (a nice twist on the drive-by jailbreak of iOS 4). Access to the file system opens up an even bigger can of worms.

Basically, all the things that we want as developers are probably too dangerous to hand out to the web. I wish that weren't true, but it is.

Untrusted computing

Let's assume that all of the above is true, and the web can't safely expand for developer tools. You can still build powerful apps in a browser, they just have to be supported by a server. For example, you can use a service like Cloud 9 (now an AWS subsidiary) to work on a hosted VM. This is the revival of the thick-client model: offline capabilities in a pinch, but ultimately you're still going to need an internet connection to get work done.

In this vision, we are leaning more on the browser sandbox: creating a two-tier system with the web as a client runtime, and a native tier for more trust on the local machine. But is that true? Can the web be made safe? Is it safe now? The answer is, at best, "it depends." Every third-party embed or script exposes your users to risk — if you use an ad network, you don't have any real idea who could be reading their auth cookies or tracking their movements. The miracle of the web isn't that it is safe, it's that it manages to be useful despite how rampantly unsafe its defaults are.

So along with the shift back to thick clients has come a change in the browser vendors' attitude toward powerful API features. For example, you can no longer use geolocation or the camera/microphone in Chrome on pages that aren't served over HTTPS, with other browsers to follow. Safari already disallows third-party cookie access as a general rule. New APIs, like Service Worker, require HTTPS. And I don't think it's hard to imagine a world where an API also requires a strict Content Security Policy that bans third-party embeds altogether (another place where Chrome apps led the way).

The packaged app security model was that if you put these safeguards into place and verified the package contents, you could trust the code to access additional capabilities. But trusting the client was a mistake when people were writing Quakebots, and it stayed a mistake in the browser. In the new model, those controls are the minimum just to keep what you had. Anything extra that lives solely on the client is going to face a serious uphill battle.

Mind the gap

The longer that I work on Caret, the less I'm upset by the idea that its days are numbered. Working on a moderately-successful open source project is exhausting: people have no problems making demands, sending in random changes, or asking the same questions over and over again. It's like having a second boss, but one that doesn't pay me or offer me any opportunities for advancement. It's good for exposure, but people die from exposure.

The one regret that I will have is the loss of Caret's educational value. Since its early days, there's been a small but steady stream of e-mail from teachers who are using it in classrooms, both because Chromebooks are huge in education and because Caret provides a pretty good editor with almost no fuss (you don't even have to be signed in). If you're a student, or poor, or a poor student, it's a pretty good starter option, with no real competition for its market niche.

There are alternatives, but they tend to be online-only (like Mozilla's Thimble) or they're not Chromebook friendly (Atom) or they're completely unacceptable in a just world (Vim). And for that reason alone, I hope Chrome keeps packaged apps around, even if they refuse to spend any time improving the infrastructure. Google's not great at end-of-life maintenance, but there are a lot of people counting on this weird little ecosystem they've enabled. It would be a shame to let that die.

August 1, 2016

<slide-show>

On Thursday, I'll be giving a talk at CascadiaFest on using custom elements in production. It's kind of a sales pitch, to convince people that adopting web components is safe to do, despite the instability of the spec and the contentious politics between browsers. After all, we've been publishing with several components at the Times for almost two years now, with good results.

When I presented an early version of this at SeattleJS, I presented by scrolling through a single text file instead of slides, because I've always wanted to do that. But for Cascadia, I wanted to do something a little more special, so I built the presentation itself out of custom elements, with the goal that it would demonstrate how to write code that works with both versions of the spec. It's also meant to be a good example for someone who's just learning how web components function — I use pretty much every custom elements feature at one point or another in 300 lines of code. You can take a look at the source for it here.

There are several strategies that I ended up emphasizing while writing the <slide-show> elements, primarily the heavy use of events to tame asynchronicity. It turns out that between V0, V1, and the two major polyfills, elements and their attributes are resolved by the parser with entirely different timing. It's really important that child elements notify their parent when they upgrade, and parents shouldn't assume that children are ready at startup.

One way to deal with asynchronous upgrades is just to put all your functionality in the parent element (our <leaflet-map> does this), but I wanted to make these slides easier to extend with new types (such as text, code, or image slides). In this case, the slide show looks for a parsedContent property on the current slide, and it's the child's job to populate and update that value. An earlier version called a parseContents() method, but using properties as "duck-typing" makes it much easier to handle un-upgraded elements, and moving the responsibility to the child also greatly simplified the process of watching slide contents for changes.
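Here's a rough sketch of that property-plus-event pattern. It is not the actual <slide-show> source: SlideStub, showSlide, and the "slide-ready" event name are all hypothetical, and it uses a bare EventTarget instead of real elements so that it runs without a DOM.

```javascript
// Hypothetical child: starts out "un-upgraded", with no parsedContent yet.
class SlideStub extends EventTarget {
  constructor(text) {
    super();
    this.text = text;
    this.parsedContent = undefined;
  }

  // Simulates the upgrade: populate the property, then notify listeners.
  upgrade() {
    this.parsedContent = this.text.trim();
    this.dispatchEvent(new Event("slide-ready"));
  }
}

// Parent logic: duck-type on the property instead of calling a method,
// and wait for the event if the child isn't ready yet.
function showSlide(slide, render) {
  if (slide.parsedContent !== undefined) {
    render(slide.parsedContent);
    return;
  }
  slide.addEventListener("slide-ready", function() {
    render(slide.parsedContent);
  }, { once: true });
}

var rendered = [];
var slide = new SlideStub("  Hello, Cascadia  ");
showSlide(slide, function(content) { rendered.push(content); });
var renderedBeforeUpgrade = rendered.length;
slide.upgrade();
```

Because the parent only ever reads a property, an element that hasn't been upgraded yet looks like one with nothing to show, rather than one that throws when a method is missing.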

A nice side effect of using live properties and events is that it "feels" a lot more like a built-in element. The modern DOM API is built on similar primitives, so writing the glue code for the UI ended up being very pleasant, and it's possible to interact using the dev tools in a natural way. I suspect that well-built component libraries in the future will be judged on how well they leverage a declarative interface to blend in with existing elements.

Ironically, between child elements and Shadow DOM, it's actually much harder to move between different polyfills than it is to write an element definition for both the new and old specifications. We've always written for Giammarchi's registerElement shim at the Times, and it was shocking for me to find out that Polymer's shim not only diverges from its counterpart, but also differs from Chrome's native implementation. Coding around these differences took a bit of effort, but it's probably work I should have done at the start, and the result is quite a bit nicer than some of the hacks I've done for the Times. I almost feel like I need to go back now and update them with what I've learned.

Writing this presentation was a good way to make sure I was current on the new spec, and I'm actually pretty happy with the way things have turned out. When WebKit started prototyping their own API, I started to get a bit nervous, but the resulting changes are relatively minor: some property names have changed, the lifecycle is ordered a bit differently, and upgrade code is called in the constructor (to encourage using the class syntax) instead of from a createdCallback() method. Most of these are positive alterations, and while there are some losses going from V0 to V1 (no is attribute to subclass arbitrary elements), they're not dealbreakers. Overall, I'm more optimistic about the future of web components than I have been in quite a while, and I'm looking forward to telling people about it at Cascadia!
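To make the renamed callbacks concrete, here's a simplified sketch of one definition registered against either API. It is not the code from the presentation: defineElement and the lifecycle object are hypothetical names, and because the V1 branch uses class syntax, this file would only parse in browsers that support it. Outside a browser it registers nothing and returns "none".

```javascript
// Hypothetical helper: maps one set of lifecycle functions onto V1 or V0.
function defineElement(tag, lifecycle) {
  if (typeof customElements !== "undefined") {
    // V1: setup moves into the constructor, and callbacks are renamed.
    customElements.define(tag, class extends HTMLElement {
      constructor() {
        super();
        lifecycle.created.call(this);
      }
      connectedCallback() { lifecycle.attached.call(this); }
      disconnectedCallback() { lifecycle.detached.call(this); }
    });
    return "v1";
  }
  if (typeof document !== "undefined" && typeof document.registerElement === "function") {
    // V0: a prototype object, with the constructor handled internally.
    var proto = Object.create(HTMLElement.prototype);
    proto.createdCallback = lifecycle.created;
    proto.attachedCallback = lifecycle.attached;
    proto.detachedCallback = lifecycle.detached;
    document.registerElement(tag, { prototype: proto });
    return "v0";
  }
  return "none";
}

var registeredWith = defineElement("demo-element", {
  created: function() {},
  attached: function() {},
  detached: function() {}
});
```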

May 10, 2016

Behind the Times

The paper recently launched a new native app. I can't say I'm thrilled about that, but nobody made me CEO. Still, the technical approach it takes is "interesting:" its backing API converts articles into a linear stream of blocks, each of which is then hand-rendered in the app. That's the plan, at least: at this time, it doesn't support non-text inline content at all. As a result, a lot of our more creative digital content doesn't appear in the app, or is distorted when it does appear.

The justification given for this decision was speed, with the implicit statement being that a webview would be inherently too slow to use. But is that true? I can't resist a challenge, and it seemed like a great opportunity to test out some new web features I haven't used much, so I decided to try building a client. You can find the code here. It's currently structured as a Chrome app, but that's just to get around the CORS limit since our API doesn't have the Access-Control-Allow-Origin headers added.

The app uses a technique that's been popularized by Nolan Lawson's Pokedex.org, in which almost all of the time-consuming code runs in a Web Worker, and the main thread just handles capturing UI events and re-rendering. I started out with the worker process handling network and caching in IndexedDB (the poor man's Service Worker), and then expanded it to do HTML sanitization as well. There's probably other stuff I could move in, but honestly I think it's at a good balance now.

By putting all this stuff into a second script that runs independently, it frees up the browser to maintain a smooth frame rate in animations and UI response. It's not just the fact that I'm doing work elsewhere, but also that there's hardly any garbage collection on the main thread, which means no halting while the JavaScript VM cleans up. I thought building an app this way would be difficult, but it turns out to be mostly similar to writing any page that uses a lot of AJAX — structure the worker as a "server" and the patterns are pretty much the same.
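As a sketch of what "structure the worker as a server" can look like (the route name, message shape, and handleRequest function are all made up for illustration, not taken from the app):

```javascript
// Hypothetical routes; in a real app these would hit the network or IndexedDB.
var routes = {
  "section/list": function(params) {
    return ["Headline one", "Headline two"].slice(0, params.limit);
  }
};

// Turn one request message into one response message, like a tiny server.
function handleRequest(message) {
  var handler = routes[message.route];
  if (!handler) {
    return { id: message.id, error: "Unknown route: " + message.route };
  }
  return { id: message.id, result: handler(message.params || {}) };
}

// Inside a worker, the wiring would be roughly:
//   self.onmessage = e => self.postMessage(handleRequest(e.data));
var reply = handleRequest({ id: 1, route: "section/list", params: { limit: 1 } });
```

The id field lets the main thread match each reply to the request that caused it, which is the same bookkeeping you'd do for overlapping AJAX calls.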

The other new technology that I learned for this project is Mithril, a virtual DOM framework that my old coworkers at ArenaNet rave about. I'm not using much of its MVC architecture, but its view rendering code is great at gradually updating the page as the worker sends back new data: I can generate the initial article list using just the titles that come from one network endpoint, and then add the thumbnails that I get from a second, lower-priority request. Readers get a faster feed of stories, and I don't have to manually synchronize the DOM with the new data.

The metrics from this version of the app are (unsurprisingly) pretty good! The biggest slowdown is the network, which would also be a problem in native code: loading the article list for a section requires one request to get the article IDs, and then one request for each article in that section (up to 21 in total). That takes a while: about a second, on average. On the other hand, it means we have every article cached by the time that the user can choose something to read, which cuts the time for requesting and loading an individual article to around 150ms on my Chromebook.

That's not to say that there aren't problems, although I think they're manageable. For one thing, the worker and app bundles are way too big right now (700KB and 200KB, respectively), in part because they're pulling in a bunch of big NPM modules to do their processing. These should be lazy-loaded for speed as much as possible: we don't need HTML parsing right away, for example, which would cut a good 500KB off of the worker's initial size. Every kilobyte of script is roughly 1ms of load time on a mobile device, so spreading that out will drastically speed up the app's startup time.
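One way to spread that cost out is a load-on-first-use wrapper. This is only a sketch under assumptions: lazy and getSanitizer are hypothetical names, and the "sanitizer" here is a trivial placeholder standing in for a heavy parsing module. In a worker, the loader could call importScripts(), which is synchronous.

```javascript
// Wrap a loader so that it runs once, the first time the value is needed.
function lazy(load) {
  var loaded = false;
  var value;
  return function() {
    if (!loaded) {
      value = load();
      loaded = true;
    }
    return value;
  };
}

var loadCount = 0;

// Placeholder loader: a real one would pull in the parsing code here.
var getSanitizer = lazy(function() {
  loadCount++;
  return { clean: function(html) { return html.trim(); } };
});

var loadsAtStartup = loadCount;          // nothing loaded yet
var cleaned = getSanitizer().clean("  <p>story</p>  ");
getSanitizer();                          // second call reuses the cached module
```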

As an interesting side note, we could cut almost all that weight entirely if the document.implementation object was available in Web Workers. Weir, for example, does all its parsing and sanitization in an inert document. Unfortunately, the DOM isn't thread-safe, so nothing related to document is available outside the main process, and I suspect a serious sanitization pass would blow past our frame budget anyway. Oh well: htmlparser2 and friends it is.

Ironically, the other big issue is mostly a result of packaging this up as a Chrome app. While that lets me talk to the CMS without having CORS support, it also comes with a fearsome content security policy. The app shell can't directly load images or fonts from the network, so we have to load article thumbnails through JavaScript manually instead. Within Chrome's <webview> tag, we have the opposite problem: the webview can't load anything from the app, and it has a weird protocol location when loaded from a data URL, so all relative links have to be rewritten. It's not insurmountable, but you have to be pretty comfortable with the way browsers work to figure it out, and the debugging can get a little hairy.
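For the link rewriting, the core of it can be sketched with the standard URL constructor. The function name and the example.com base below are assumptions for illustration, and the regular expression is only there for brevity; it is not how you'd want to process arbitrary HTML.

```javascript
// Rewrite relative href/src attributes against a base URL.
function absolutize(html, base) {
  return html.replace(/\b(href|src)="([^"]*)"/g, function(match, attr, value) {
    try {
      return attr + '="' + new URL(value, base).href + '"';
    } catch (err) {
      return match; // leave anything unparseable untouched
    }
  });
}

var base = "https://example.com/news/story.html"; // hypothetical article URL
var fixedLink = absolutize('<a href="/sports/">Sports</a>', base);
var fixedImage = absolutize('<img src="img/chart.png">', base);
```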

So there you have it: a web app that performs like native, but includes support for features like DocumentCloud embeds or interactive HTML graphs. At the very least, I think you could use this to advocate for a hybrid native/web client on your news site. But there's a strong argument to be made that this could be your only app: add a Service Worker and (in Chrome and Firefox) it could load instantly and work offline after the first visit. It would even get a home screen icon and push notification support. I think the possibilities for progressive web apps in the news industry are really exciting, and building this client makes me think it's doable without a huge amount of extra work.

April 15, 2016

Calculated Amalgamation

In a fit of nostalgia, I've been trying to get my hands on a TI-82 calculator for a few weeks now. TI BASIC was probably the first programming language in which I actually wrote significant amounts of code: although a few years later I'd start working in C for PalmOS and Windows CE, I have a lot of memories of trying to squeeze programs for speed and size during slow class periods. While I keep checking Goodwill for spares, there are plenty of TI calculator emulation apps, so I grabbed one and loaded up a TI-82 ROM to see what I've retained.

Actually, TI BASIC is really weird. Things I had forgotten:

- You can type in all-caps text if you want, but most of the time you don't, because all of the programming keywords (If, Else, While, etc.) are actually single "character" glyphs that you insert from a menu.

- In fact, pretty much the only code that's typed manually are variable names, of which you get 26 (one for each letter). There are also six arrays (max length 99), five two-dimensional matrices (limited by memory), and a handful of state variables you can abuse if you really need more. Everything is global.

- Variables aren't stored using =, which is reserved for testing, but with a left-to-right arrow operator: value → dest. I imagine this clears up a lot of ambiguity in the parser.

- Of course, if you're processing data linearly, you can do a lot without explicit variables, because the result of any statement gets stored in Ans. So you can chain a lot of operations together as long as you just keep operating on the output of the previous line.

- There's no debugger, but you can hit the On key to break at any time, and either quit or jump to the current line.

- You can call other programs and they do return after calling, but there are no function definitions or return values other than Ans (remember, everything is global). There is GOTO, but it apparently causes memory leaks when used (thanks, Dijkstra!).

I'd romanticized it over time — the self-contained hardware, the variable-juggling, the 1-bit graphics on a 96x64 screen. Even today, I'm kind of bizarrely fascinated by this environment, which feels like the world's most cumbersome register VM. But loading up the emulator, it's obvious why I never actually finished any of my projects: TI BASIC is legitimately a terrible way to work.

In retrospect, it's obviously a scripting language for a plotting library, and not the game development environment I wanted it to be when I was trying to build Wolf3D clones. You're supposed to write simple macros in TI BASIC, not full-sized applications. But as a bored kid, it was a great playground, and the limitations of the platform (including its molasses-slow interpreter) made simple problems into brainteasers (it's almost literally the challenge behind TIS-100).

These days, the kids have it way better than I did. A micro:bit is cheaper and syncs with a phone or computer. A Raspberry Pi is a real computer of its own, as is the average smartphone. And a laptop or Chromebook with a browser is miles more productive than a TI-82 could ever be. On the other hand, they probably can't sneak any of those into their trig classes and get away with it. And maybe that's for the best — look how I turned out!

March 22, 2016

ES6 in anger

One of the (many) advantages of running Seattle Times interactives on an entirely different tech stack from the rest of the paper is that we can use new web features as quickly as we can train ourselves on them. And because each news app ships with an isolated set of dependencies, it's easy to experiment. We've been using a lot of new ES6 features as standard for more than a year now, and I think it's a good chance to talk about how to use them effectively.

The Good

Surprisingly (to me at least), the single most useful ES6 feature has been arrow functions. The key to using them well is to restrict them only to one-liners, which you'd think would limit their usefulness. Instead, it frees you up to write much more readable JavaScript, especially in array processing. As soon as it breaks to a second line (or seems like it might do so in the future), I switch to writing regular function statements.

//It's easy to filter and map:
var result = list.filter(d => d.id).map(d => d.value);

//Better querySelectorAll with the spread operator:
var $ = s => [...document.querySelectorAll(s)];

//Fast event logging:
map.on("click", e => console.log(e.latlng));

//Better styling with template strings:
var translate = (x, y) => `translate(${x}px, ${y}px);`;

Template strings are the second biggest win, especially as above, where they're combined with arrow functions to create text snippets. Having a multiline string in JS is very useful, and being able to insert arbitrary values makes building dynamic popups or CSS styles enormously simpler. I love writing template strings for quick chunks of templating, or embedding readable SQL in my Node apps.
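A multiline popup might look something like this. It's an illustrative sketch: the popup function and the name, wins, and losses fields are invented, not pulled from one of our projects.

```javascript
// Build a small chunk of popup markup from a data object.
var popup = function(team) {
  return `<h2>${team.name}</h2>
<p>Record: ${team.wins}-${team.losses}</p>`;
};

var markup = popup({ name: "Mariners", wins: 3, losses: 1 });
console.log(markup);
```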

Despite the name, template strings aren't real templates: they can't handle loops, they don't really do interpolation, and the interface for using "tagged" strings is cumbersome. If you're writing very long template strings (say, more than five lines), it's probably a sign that you need to switch to something like Handlebars or EJS. I have yet to see a "templating language" built on tagged strings that didn't seem like a wildly frustrating experience, and despite the industry's shift toward embedded DSLs like React's JSX, there is a benefit to keeping different types of code in different files (if only for widespread syntax highlighting).
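For reference, this is roughly what the tagged interface involves: the tag function receives the literal chunks as an array, followed by the interpolated values as separate arguments, and has to stitch them back together itself. The html tag and escapeHTML helper here are hypothetical, written just to show the shape.

```javascript
// Escape the characters that matter inside HTML text and attributes.
function escapeHTML(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Tag function: interleave the literal chunks with escaped values.
function html(strings, ...values) {
  return strings.reduce(function(out, chunk, i) {
    return out + escapeHTML(values[i - 1]) + chunk;
  });
}

var comment = "<b>hi</b> & bye";
var safe = html`<p>${comment}</p>`;
```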

The last feature I've really embraced is destructuring and object literals. They're mostly valuable for cleanup, since all they do is cut down on repetition. But they're pleasant to use, especially when parsing text and interacting with CommonJS modules.

//Splitting dates is much nicer now:
var [year, month, day] = dateString.split(/\/|-/);

//Or getting substrings out of a regex match:
var re = /(\w{3})mlb_(\w{3})mlb_(\d+)/;
var [match, away, home, index] = gameString.match(re);

//Exporting from a module can be simpler:
var x = "a";
var y = "b";
module.exports = { x, y };

//And imports are cleaner:
var { x } = require("module");

The Bad

I've tried to like ES6 classes and modules, and it's possible that one day they're going to be really great, but right now they're not terribly friendly. Classes are just syntactic sugar around ES5 prototypes — although they look like Java-esque class statements, they're still going to act in surprising ways for developers who are used to traditional inheritance. And for JavaScript programmers who understand how the language actually works, class definitions boast a weird, comma-less syntax that's sort of like the new object literal syntax, but far enough off that it keeps tripping me up.

The turning point for the new class keyword will be when the related, un-polyfillable features make their way into browsers — I'm thinking mainly of the new Symbols that serve as feature flags and the ability to extend Array and other built-ins. Until that time, I don't really see the appeal, but on the other hand I've developed a general aversion to traditional object-oriented programming, so I'm probably not the best person to ask.

Modules also have some nice features from a technical standpoint, but there's just no reason to use them over CommonJS right now, especially since we're already compiling our applications during the build process (and you have to do that, because browser support is basically nil). The parts that are really interesting to me about the module system — namely, the configurable loader system — aren't even fully specified yet.

New discoveries

Most of what we use on the Times' interactive team is restricted to portions of ES6 that can be transpiled by Babel, so there are a lot of features (proxies, for example) that I don't have any experience using. In a Node environment, however, I've had a chance to use some of those features on the server. When I was writing our MLB scraper, I took the opportunity to try out generators for the first time.

Generators are borrowed liberally from Python, and they're basically constructors for custom iterable sequences. You can use them to make normal objects respond to language-level iteration (i.e., for ... of and the spread operator), but you can also define sequences that don't correspond to anything in particular. In my case, I created a generator for the calendar months that the scraper loads from the API, which (when hooked up to the command line flags) lets users restart an MLB download from a later time period:

    //feed this a starting year and month
    var monthGen = function*(year, month) {
      while (year < 2016) {
        yield { year, month };
        month++;
        if (month > 12) {
          month = 1;
          year++;
        }
      }
    };

    //generate a sequence from 2008 to 2016
    var months = [...monthGen(2008, 1)];

That's a really nice code pattern for creating arbitrary lists, and it opens up a lot of doors for JavaScript developers. I've been reading and writing a bit more Python lately, and it's been amazing to see how much a simple pattern like this, applied language-wide, can really contribute to its ergonomics. Instead of the Stream object that's common in Node, Python often uses generators and iteration for common tasks, like reading a file line-by-line or processing a data pipeline. As a result, I suspect most new Python programmers need to survey a lot less intellectual surface area to get up and running, even while the guts underneath are probably less elegant for advanced users.
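The other use mentioned earlier, making a normal object respond to for ... of and the spread operator, can be sketched the same way. The schedule object and its contents below are invented for illustration; the only real requirement is a generator method stored under Symbol.iterator.

```javascript
// Hypothetical schedule object; its shape is an assumption for illustration.
var schedule = {
  games: ["SEA at OAK", "SEA at TEX", "HOU at SEA"],
  // A generator method under Symbol.iterator makes the object iterable.
  *[Symbol.iterator]() {
    for (var game of this.games) {
      yield game;
    }
  }
};

// Language-level iteration now works on the plain object:
var seen = [];
for (var game of schedule) {
  seen.push(game);
}

// ...and so does the spread operator.
var copy = [...schedule];
```

Nothing outside the object needs to know how the games are stored, which is the same property that makes the Python version of this pattern so pleasant.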

It surprised me that I was so impressed with generators, since I haven't particularly liked Python very much in the past. But in reading the Cookbook to prep for a UW class in Python, I've realized that the two languages are actually closer together than I'd thought, and getting closer. Python's class implementation is actually prototypical behind the scenes, and its use of duck typing for built-in language features (such as the with statement) bears a strong resemblance to the work being done on JavaScript Promises (a.k.a. "then-ables") and iterator protocols.

It's easy to be resistant to change, especially when it's at the level of a language (computer or otherwise). I've been critical of a lot of the decisions made in ES6 in the past, but these are positive additions on the whole. It's also exciting, as someone who has been working in JavaScript at a deep level, to find that it has new tricks, and to stretch my brain a little integrating them into my vocabulary. It's good for all of us to be newcomers every so often, so that we don't get too full of ourselves.

December 28, 2015

Let's not

Right now you can access my portfolio over a secure, encrypted connection, thanks to Let's Encrypt. Which is pretty cool! On the other hand, if nginx restarts this week, it'll probably crash on a bad config value, temporarily disabling all my public-facing websites. This has been emblematic of my HTTPS experience in general: a mix of triumphs and severe configuration mishaps.

A little background: in order to serve a website over a secure connection, you need a digital certificate to encrypt communication with the browser. You can generate these certificates yourself, but that's really only good for personal use. The self-signed cert has to be manually installed on each machine that accesses the server, otherwise the browser will throw up a big, ugly warning screen. The alternative is to buy a certificate from a "trusted authority," most of which are not particularly trustworthy or authoritative, but it'll get you a green lock icon in the URL bar. Purchased certs tend to be either expensive or a hassle or both.

After the Snowden leaks, there was a lot of interest in encrypting all web traffic, which meant bypassing the existing certificate authority protection racket run by Symantec et al. Mozilla and some other organizations got together and started Let's Encrypt, with the goal of making trusted certificates free and easy. I figure they're halfway there: I didn't pay anyone for the cert, at least.

There's an official client for the service, but it only works for Apache and it's kind of hefty. My server is set up in an unsupported (but still pretty standard) configuration: I run nginx as a reverse proxy in front of Apache (for PHP scripts) and Node (for various apps, including Weir), both of which I'd like to be secured. So I used acme-tiny instead, which basically just talks to the cert API and is small enough that I could read and understand the whole thing. I wrote a shell script to wrap it up and automate things. Automation is important, because unlike paid certificates, these are only good for 90 days, so you need a cron job set to run every month or so to renew them.

Setting all this up wasn't an easy process. The acme-tiny script is well-written, but it has bugs on the version of Python that comes with CentOS. Then I had to set up nginx to use the certificates manually. My webmail got locked into an infinite redirect once I moved my self-signed cert out of Apache and onto the proxy. And the restart crash? Turns out that Let's Encrypt is rate-limited on a per-domain basis, and I didn't back up the current certificate before I hit the rate limit, so my update script overwrote it with an empty version. Luckily, nginx caches certs and won't restart if it detects a bad config, so I'm safe as long as it can outlast the seven-day rate-limit window (it probably will: it's been up 333 days so far, after all).
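The overwrite is the kind of mistake a small guard would catch. My actual wrapper is a shell script, so treat the following as a sketch in Node rather than what runs on the server: installCert and both paths are hypothetical names, and the idea is just to refuse an empty result and keep a copy of the old certificate before replacing it.

```javascript
var fs = require("fs");

// Hypothetical helper: only replace the live certificate when the freshly
// issued file actually has content, and keep a backup of the old one.
var installCert = function(newPath, livePath) {
  var fresh = fs.existsSync(newPath) ? fs.readFileSync(newPath) : null;
  if (!fresh || fresh.length === 0) {
    // a rate-limited or failed request can leave an empty file behind
    return false;
  }
  if (fs.existsSync(livePath)) {
    // keep the previous certificate next to the live one
    fs.writeFileSync(livePath + ".bak", fs.readFileSync(livePath));
  }
  fs.writeFileSync(livePath, fresh);
  return true;
};
```

A renewal job could call this after each request and skip the nginx reload whenever it returns false.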

Without literally years of server admin experience, I'm not sure I would have made it through these issues. And as I mentioned, my system is pretty standard — there's no load-balancer, no CDN, and I don't need to host third-party content. I also don't have any business that gets lost if anything is busted and the certificate expires in March. If I were, say, an IT department responsible for a high-traffic site, I'd be a lot more cautious about moving everything over to HTTPS, either through Let's Encrypt or a paid option.

Ultimately, the news industry and other sites are going to have to follow the lead of the Washington Post, even if the timeframe takes a while. Even apart from the security benefits it carries, browsers have locked new features (Service Worker, for example) behind HTTPS, and are moving old features behind it as well (geolocation is going to be the biggest disruption there). If you want to develop fast websites in the future (assuming that's something news product management cares about, which is... questionable), and especially if you want to create rich news applications, you're going to have to be encrypted.

In my case, I wanted to get a head start on developing with new browser features (a Service Worker would clean up a lot of Weir code), so it's worth the hassle. And we will continue to push these boundaries on the Seattle Times interactives team, since we've moved our S3 hosting to HTTPS (the rest of the site will follow eventually).

But I think there's a lot of tension between where we want to be, as a news industry, and where it's possible for us to be right now. Although I've seen people calling for incentives to change it (such as requiring HTTPS for news grants), the truth is that it just isn't that simple. News sites are often built in a baroque, overcomplicated set of layers — the Seattle Times, for example, currently sits behind a CDN, several instances of Varnish, some reverse proxies, and a load balancer, mostly due to a lot of historical baggage. Changing this to run securely is going to be a big process, even for a company of our size (maybe because of our size). I can't imagine the hassle for local papers that might have little or no IT support. It won't happen overnight, and Let's Encrypt hasn't done anything to change that yet.

In the meantime, I think it's worth stepping back and asking what we really want out of a digital news industry, because sometimes it's hard to maintain perspective from in the trenches. Is it important that readers be able to see our sites securely, free from worries that third parties are snooping or altering what they see? Sure, that's important. Is it in the top three things that Americans need from local news, above problems like "a sustainable revenue model" and "a CMS that doesn't actively fight against the newsroom?" Probably not. Given a choice between a cryptographically-secure media and a diverse, sustainably-funded media, I'm personally going to take the latter every time.

December 7, 2015

How We're Fast

Over the Thanksgiving holiday, when I wasn't busily digesting as much cornbread stuffing as I could eat, I spent some time running WebPageTest against various projects that the Seattle Times Interactive team has built. The news industry as a whole may not care about speed, but I do, and I want our pages as fast as possible — especially the ones that are embedded in the regular CMS via responsive frames.

After all the testing, I'm generally pleased by how our stuff stacks up, especially when compared against the rest of the site. We have some advantages, of course: our pages typically have fewer ads, and we can strip down the page for maximum efficiency. But it's also the result of a lot of hard work on our news app template, ensuring that every project comes with smart decisions built in. I genuinely think that all news pages could be this fast, so it's worth talking about how we've made it happen, especially for other news organizations that use a similar flat-file approach to their interactives.

Browserify with care

We use Browserify to package up our JavaScript, because we're not savages, and you need some sort of module system for JavaScript these days. Browserify builds all our scripts into a single file, which is important for high-latency connections (which means most cellular networks, even on 4G). We also make sure to load that bundle file with the async attribute at the bottom of the page, so that it won't block rendering.

All of that is pretty standard best practice, but we've also learned that Browserify can be dangerous if you're not careful. A lot of NPM modules are published with the unminified, debug version of the library as the default export from the module. Angular in particular is bad about this: running require("angular") on its own will load a file filled with comments and documentation, totalling more than a megabyte in size (even after gzip, it's still more than 200KB). That's huge!

As a result, one of our production checklist items is to make sure that we are loading the minified version of any external libraries. We also use the browser property in our package.json file to alias common libraries to their minified versions, so that when we require Angular, jQuery, or Leaflet, it automatically defaults to the smallest file.

Gzip on S3

Like a lot of newsroom developers, my team hosts files on Amazon S3, mostly because it's cheap and reliable. People like to think about S3 as though it's just a normal, hierarchical flat-file server, like Apache or Nginx, but it's not. S3 is really a key-value store: you put in a path, and it spits back a prerecorded reply, including the headers.

If you think of S3 as a server, you'll expect it to do a bunch of things that it doesn't actually do. For example, it doesn't set a cache expiration date, and it doesn't know about content types. It also doesn't understand about Gzip compression, so it'll merrily serve your files in their uncompressed form, making them way bigger than they need to be, even if the browser requests the compressed version.

We get around this by running a compression stage on any text-based file during deployment, and setting the headers for the stored object to match. This does mean that theoretically, a browser that doesn't support Gzip will be unable to request that content, because S3 will always respond with compressed content no matter what Accept-Encoding header the browser sends. Luckily, every browser since IE4 supports it.
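As a rough sketch of that compression stage (not our actual deploy task), the helper below gzips a text file's contents with Node's built-in zlib module and pairs the result with matching headers. The property names follow the AWS SDK's putObject parameters; prepareUpload itself, the sample key, and the cache lifetime are assumptions for illustration, and a real upload would also need a Bucket.

```javascript
var zlib = require("zlib");

// Hypothetical helper: build the stored-object settings for one text file.
var prepareUpload = function(key, contents, contentType) {
  return {
    Key: key,
    // compress once at deploy time, since S3 won't do it per request
    Body: zlib.gzipSync(contents),
    ContentType: contentType,
    // tells the browser to decompress the body before using it
    ContentEncoding: "gzip",
    // assumed lifetime; S3 sets no cache expiration on its own
    CacheControl: "public, max-age=300"
  };
};

var upload = prepareUpload("project/index.html", "<h1>Hello</h1>", "text/html");
```

Because the headers are stored alongside the object, every response for that key carries them, which is exactly why a client without Gzip support would be out of luck.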

Reduce framework code

I love Angular. If you want to quickly generate a visualization with powerful tools for filtering and data binding, you can't do much better. I personally think it's an order of magnitude better than D3. But Angular can also be brutally slow: its change detection algorithm requires a lot of time and memory as a tradeoff for developer convenience.

On a recent project that looked at animal imports, we started with Angular as a way to test out the visualization, but soon noticed that it was taking three or four seconds just to parse and apply the data. On a desktop, that time is a drag. On mobile, it's likely to get the tab terminated, or convince readers that there's something wrong with it.

When the profiler says that you're spending that much time in JavaScript, there are two options. The first is to try to find ways to work around the framework, which can range from unpleasant to actively painful. The second is to just rewrite in vanilla JS. It sounds more difficult to do the rewrite, but if all you're doing is data-binding and events, you can usually replace it pretty easily with a little templating and some custom data attributes. The resulting code isn't as clean or simple, but in the case of the animal imports, it dropped our JS execution time to under 100ms. That's fast.
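To give a sense of what "a little templating and some custom data attributes" can mean, here is a minimal sketch. The rows, field names, and helper functions are all invented for the example and are not the code from the animal imports project.

```javascript
// Hypothetical rows; the real import data is not shown in this post.
var rows = [
  { id: "parrot", species: "Parrots", count: 120 },
  { id: "gecko", species: "Geckos", count: 45 }
];

// Template the rows once, tagging each element with a data attribute.
var renderList = items => items.map(item =>
  `<li data-id="${item.id}">${item.species}: ${item.count}</li>`
).join("");

// Map a clicked element back to its data by reading the attribute.
var findRow = (items, target) => {
  var id = target.getAttribute("data-id");
  return items.find(item => item.id === id);
};

// In the browser, with assumed names for the list element and the handler:
// list.innerHTML = renderList(rows);
// list.addEventListener("click", e => showDetail(findRow(rows, e.target)));
```

One delegated listener on the list stands in for the per-row bindings a framework would create, and there is no change detection pass to pay for.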

Even jQuery can be optional these days. Because we compile ES6 down with Babel, a lot of DOM code that would be ungainly can become elegant. Template strings and arrow functions alone have allowed us to cut out DOM libraries entirely, and as a result many of our interactives consist of no external libraries at all. If you haven't checked into the advantages of using Babel in your build process, it's well worth another look.

Reduce third-party code

The number one contributor to page load time is not written by journalists: it's the third-party ad code that runs on the page. There may be only so much you can do about this, since it pays the bills, and of course it may not even apply on embedded graphics. But on our standalone pages, I've taken a strong stance on implementing all code ourselves whenever possible. For example, although our commenting system usually requires multiple scripts loaded synchronously, I wrote a loader that runs through and adds them asynchronously, and only after a user clicks on the "view comments" banner. We can't avoid the hit, but we can delay it until well after the rest of the page has had a chance to render.
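A deferred loader along those lines can be quite small. This is a sketch under assumptions, not our production code: loadScripts, the URL list, and the banner wiring are hypothetical, and the document is passed in as an argument only to keep the function easy to test.

```javascript
// Hypothetical loader: inject each script tag only when asked to.
var loadScripts = function(urls, doc) {
  urls.forEach(function(url) {
    var script = doc.createElement("script");
    script.src = url;
    // injected scripts don't block parsing; async = false asks the
    // browser to still execute them in the order they were added
    script.async = false;
    doc.body.appendChild(script);
  });
};

// In the browser, with assumed names for the banner and the URL list:
// banner.addEventListener("click", () => loadScripts(commentScripts, document));
```

Setting async to false matters when the scripts depend on each other, as ours do: they still download in parallel, but run in sequence.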

Lazy-load everything

Once you've delayed scripts with the async attribute, trimmed the size of those scripts and compressed them, and deferred as much third-party code as you can, what's left over? In our case, this is where we start getting into the structure of the actual interactive, and how it loads itself. For most interactives, we embed data directly into the page, but beyond a certain size it becomes worthwhile to grab it via AJAX instead.

But there's another way to think about lazy-loading, and that's to consider what format you're actually using to populate the page. I'm as big a fan of progressive enhancement as anyone else, but in the case of my team, what we produce is interactive — there's literally no point if JavaScript is disabled. I've found that moving content into JSON and then templating it onto the page can reduce download times significantly, while the speed hit is negligible. Finding the balance between network speed and JavaScript execution time is a constant process for us.

When performance matters

Finally, a note of caution: as much fun as it is to squeeze every last millisecond out of the browser, I'm a little uncomfortable making it the alpha and omega of the job. Ultimately, our goal is to inform people — we'd like that to be fast, but a fast page with bad or misleading reporting is still a failure.

What I like about front-end speed is that it serves as a useful proxy for site quality. A site that's fast can't load too many ads. It can't serve too many tracking scripts. It has to put the reader first. It's easy, much of the time, to chip away at performance in the name of business metrics: loading an additional analytics script to get more information, or an obnoxious ad for a short-term revenue boost.

But if you put speed first, every decision has to start from the perspective of "what's good for the reader?" It's hard to measure the impact of good journalism, but we can have metrics for speed and other technical aspects of the presentation. We can spend more time on the former if we have strong, user-centric guidelines on the latter. If we want people to give us money over the long term, that seems like the only healthy strategy to me.

November 20, 2015

Weir, year two

I realized the other day that Google Reader shut down in June of 2013, which means I've been using Weir as my RSS reader for more than two years now. It's my longest-running software project, and still one of the most complex things I've built in Node. And apart from occasional revisions, it's been up and running constantly, in mobile and desktop browsers, that entire time.

I don't log out a lot of metrics from Weir, so there's a lot of stuff that I'm not tracking. But I can say that there are currently 113 subscriptions, with around 6,000 stories in the database. The server that hosts the app (as well as my various domains) downloads about 20GB of data each month, most of which is probably Weir (the rest is e-mail and server updates, and I'm frankly not that popular). It also hovers around 10% of available memory, which is pretty good for a garbage-collected language on a piddly little VM.

On the client side of things, the Angular code has definitely started to show its age. This was the project that I used to learn Angular, and since then I've learned a lot about the framework. Would I use it if I were writing Weir from scratch? I'm not sure. I still love the databinding aspect of Angular, but I suspect I could write a smaller, nimbler version of the UI in vanilla JavaScript pretty easily. At some point, I may give it a shot: the server API is clean enough that writing a new client should be relatively straightforward.

As an experiment in self-hosting a cloud service, Weir is a mixed success. But I have grown to love the way that something I wrote has become a fixture of my life. I clear out my stream on the bus in the morning. Throughout the day, Weir's purple tab icon lights up to let me know that new items are available. It feels like wearing clothes that I tailored for myself — using it feels a little nicer than it should, just from the pride in its construction.

November 4, 2015

Closing the textbook

Last week, when the administration sent out their quarterly "please someone cover these classes, we are very desperate" email, I put in my notice at Seattle Central College (how's that for irony?). I'll be finishing up this quarter teaching ITC 210, and then I'll need to find a new way to occupy 10-20 hours a week. For a start, I'm planning to volunteer for the local Girl Develop It organization as a TA. I'll be able to cook for Belle more often. And I'd like to be more active in managing the local Hacks/Hackers chapter that I took over earlier this year.

SCC does have some deep organizational problems, and I won't pretend they haven't influenced my decision to leave. But I don't regret the time I spent there: there's been little as rewarding as seeing people take the information I can give them and really run with it. Teaching has often pushed me to make sure that I knew every detail of a subject so that I wouldn't mislead students, and it's gotten me to explore new workflows and clarify my thinking on a lot of topics.

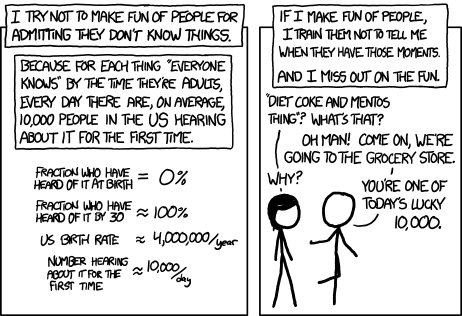

The most important thing I've learned isn't anything technical. Early on in my time as an instructor, I would often be surprised when students wouldn't know something basic, even though it might have been in the prerequisites (only later would I find out how porous those prereqs are at SCC). After a little while, I made a conscious decision that my reaction should be enthusiasm instead of surprise. Although I'm not a huge fan of XKCD, I was inspired by this comic:

Approaching ignorance not as a character flaw or personal failing, but as a chance to share something cool, was great for students. It provided a perspective from which basic questions can turn into an enthusiastic deep-dive into a topic — something even advanced students might find valuable. And it kept me engaged far longer than I think I could have managed in a curriculum where the opportunities to teach really high-level, interesting techniques just weren't there.

Although it seems a bit like pablum, and deeply out of character for a cynic like me, I actually believe that enthusiasm will continue to be useful, even when I'm not teaching regularly. After all, I work on the bleeding edge of an industry that's still struggling to figure out the Internet: the least I can do is be positive about it, for my sake if not for theirs.