September 21, 2021

I am FM

My last day at NPR was September 3, and I started at Chalkbeat on September 13. In the nine days in between, I tried to detox: I stayed away from the news, played a lot of Castlevania, and — in an effort to not feel completely useless — worked on a project I've been meaning to tackle for a while: I wrote a browser-based FM synth modeled on the classic Yamaha DX-7.

The DX-7 is the classic Lament Configuration of digital sound design. It's not only based on a model of synthesis that's unintuitive, but Yamaha wrapped it in a pushbutton user interface that discourages experimentation. Its sound defined an era almost entirely through the presets: the piano arpeggios from Twin Peaks, countless Whitney Houston ballads, and the bass line from Take on Me. I never owned a genuine DX-7, but I had one of Yamaha's budget models, and learning to build sounds on it was a long-standing white whale of mine.

Modulation Operations

Most synthesizers are what we call additive and subtractive. You generate a waveform, either by combining different wave shapes (sine, rectangle, sawtooth, or triangle) or using a noise generator, and then patch that through a series of filters and effects, and out the other end either emerges a transcendent reinterpretation of Bach (if you're Wendy Carlos) or a kind of deranged squawking (if you're me). This kind of synthesis isn't easy, per se, but it makes sense to someone who has used, say, a guitar pedalboard.

The DX-7 works differently, using something called frequency modulation (FM) synthesis. Essentially, it uses up to six sine wave oscillator units (called "operators"), but most of them aren't audible at any given time. Instead, the secondary units (called "modulators") are used to tweak the frequency of the audible operators ("carriers"). When the wave output of the modulator goes up, so does the frequency of its carrier. When the wave goes down, the frequency dips. Since these changes in frequency happen many times a second, and are often scaled to the input pitch from the keyboard, the result is a set of complicated harmonic patterns, often described as metallic, bell-like, or percussive.

In the original DX-7 hardware, this is all done using a polynomial math equation, effectively shifting the sample location for the carrier wave based on the modulator value (there's a useful .gif at Wikipedia illustrating the principle). You can do this with relatively cheap processors, and indeed that's how most of the JavaScript implementations still do it: they generate a stream of audio data directly from the phase math, and pipe that to an output. But for the sake of prototyping, I decided to do it a different way, using the native WebAudio processing graph.
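To make the "phase math" approach concrete, here is a minimal sketch of a single modulator shifting the phase of a single carrier and writing the result into a sample buffer. It is not the DX-7's actual algorithm, and it is not code from the project: the function name renderFM and the ratio and index parameters are made up for illustration, and a real voice would add envelopes, feedback, and more operators.

```javascript
// Two-operator FM (strictly speaking, phase modulation): one sine
// modulator shifts the phase of one sine carrier. Illustrative only.
function renderFM({ frequency, ratio = 2, index = 1.5, seconds = 1, sampleRate = 44100 }) {
  const length = Math.round(seconds * sampleRate);
  const samples = new Float32Array(length);
  const modFrequency = frequency * ratio; // the modulator tracks the played pitch
  for (let i = 0; i < length; i++) {
    const t = i / sampleRate;
    const modulator = Math.sin(2 * Math.PI * modFrequency * t);
    // The modulator output nudges where we "read" the carrier sine.
    samples[i] = Math.sin(2 * Math.PI * frequency * t + index * modulator);
  }
  return samples;
}

// With an index of 0 the modulator has no effect and we get a plain sine.
const plain = renderFM({ frequency: 440, index: 0, seconds: 0.01 });
// A higher index bends the waveform further away from that sine.
const bright = renderFM({ frequency: 440, index: 3, seconds: 0.01 });
```

The resulting Float32Array is the kind of buffer a script-based implementation would hand to the audio output, one block at a time.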

Adapting theory to practice

WebAudio is a kind of beautiful monstrosity. It's easy to imagine an API designer deciding that browser audio should be mostly WebGL-style primitives, basically just handing you an audio buffer array and leaving the sound generation up to you. Or the pendulum could have swung the other way, toward extreme user-friendliness, with just a slightly more performant version of the <audio> tag letting you load and trigger preset clips.

Instead, the final API ends up looking more akin to a classic Moog patchbay or a studio effects rack, letting you wire various modules together into a complex signal chain. Those nodes start out as simple oscillators and gain amplifiers, but from there it gets pretty batteries-included: nodes for impulse convolution, multiple shaped filters, and compression, plus a custom "script worker" node as an escape hatch.

Crucially for my purposes, WebAudio signal nodes can be wired to more than just audio inputs and outputs. You can also hook nodes into the control parameters, so that the output from one changes the volume or strength of another. In our case, we can use the audio signal from our modulators and pipe it into the frequency value of our carriers. It's not quite the same as the classic DX-7 formula, but it performs very well, and the sound actually isn't that far off. You can hear the classic EPIANO1 preset adapted to my code on the GitHub demo page for the project.
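As a rough sketch of that wiring (assuming a standard AudioContext, and using a hypothetical createFMPair helper with invented ratio and depth options rather than anything from the project), connecting a modulator to a carrier's frequency parameter might look like this:

```javascript
// Wire one modulator into the frequency AudioParam of one carrier.
// `context` is expected to be an AudioContext; the helper name and
// its options are hypothetical.
function createFMPair(context, { frequency = 440, ratio = 2, depth = 200 } = {}) {
  const carrier = context.createOscillator();
  const modulator = context.createOscillator();
  const modDepth = context.createGain();

  carrier.frequency.value = frequency;
  modulator.frequency.value = frequency * ratio;
  // An oscillator outputs -1..1, so this gain scales it to a swing of +/- depth Hz.
  modDepth.gain.value = depth;

  modulator.connect(modDepth);
  // Connecting to an AudioParam (rather than a node) adds the incoming
  // signal to the parameter's own value.
  modDepth.connect(carrier.frequency);
  carrier.connect(context.destination);

  return { carrier, modulator, modDepth };
}
```

In a page, you would call createFMPair(new AudioContext()) from a click handler (browsers generally require a user gesture before audio plays) and then call start() on both oscillators.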

However, while this implementation felt more intuitive than juggling Math.sin(), WebAudio also has some quirks that made it tricky. For example, oscillators are single-shot: they can only be started and stopped once. The API is full of this kind of design, where you're supposed to create nodes, connect them to the graph, and then throw them away. But when you have modulator oscillators feeding into carrier oscillators in a complicated web of amplifiers and filters, disposable audio sources don't really fit the design.

In the end, I had to wrap the whole thing in a disposable Voice class that encapsulates an arrangement of operators for a single note. When the synth is asked to play a sound, it creates a Voice containing a fresh set of operators, hooks that up to the audio context, and sends it on its merry way. This effectively makes our synthesizer polyphonic by default, since each individual frequency gets its own voice on demand. It feels wasteful, but it works.
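A stripped-down version of that idea could look something like the following. This is a sketch under assumptions, not the project's real Voice class: it uses only two operators, hard-codes the modulator settings, and the Synth wrapper and its method names are invented for the example.

```javascript
// A disposable voice: every note builds a fresh set of oscillators,
// because an OscillatorNode cannot be restarted after stop().
class Voice {
  constructor(context, frequency) {
    this.carrier = context.createOscillator();
    this.modulator = context.createOscillator();
    this.modDepth = context.createGain();
    this.output = context.createGain();

    this.carrier.frequency.value = frequency;
    this.modulator.frequency.value = frequency * 2;
    this.modDepth.gain.value = frequency; // arbitrary modulation depth

    this.modulator.connect(this.modDepth);
    this.modDepth.connect(this.carrier.frequency);
    this.carrier.connect(this.output);
    this.output.connect(context.destination);
  }

  start() {
    this.modulator.start();
    this.carrier.start();
  }

  stop() {
    // After this the oscillators are spent; the voice is simply discarded.
    this.modulator.stop();
    this.carrier.stop();
  }
}

class Synth {
  constructor(context) {
    this.context = context;
    this.voices = new Map(); // MIDI note number -> active Voice
  }

  noteOn(note) {
    this.noteOff(note); // retrigger cleanly if the key is already held
    const frequency = 440 * 2 ** ((note - 69) / 12);
    const voice = new Voice(this.context, frequency);
    this.voices.set(note, voice);
    voice.start();
  }

  noteOff(note) {
    const voice = this.voices.get(note);
    if (!voice) return;
    voice.stop();
    this.voices.delete(note);
  }
}
```

Because each held note owns its own Voice, playing a chord just means several entries in the map at once, which is where the "polyphonic by default" behavior comes from.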

Gradual complexity

Working on a project like this makes me think a lot about how it is that I build projects, and how to teach others to do the same. We often tell junior developers that they should learn by creating something fairly complex, but we don't really tell them how to do it. I suspect this is because it becomes fairly instinctual over time, so it's hard to explain.

Part of what we don't tell junior developers is that big projects are built out of little projects, one level of abstraction at a time. For example, for the Hello Operator repo, the process of getting a (mostly) working synthesizer looks like:

- Hook up some basic oscillators and trigger them on a timer

- Wrap those oscillators in an Operator class and connect them together

- Instead of using a timer, set the keyboard to trigger playback

- Wrap the Operator objects in a Voice, so that they can be played repeatedly

- Add a MIDI keyboard and feed its input directly into the synth

- Wrap MIDI in an EventTarget so it can be used for more than just notes

- Add basic inputs that tweak the Operator settings, wired directly to MIDI

- Create a bad abstraction to marry browser UI to the operator settings across multiple parameters

- Replace that abstraction with something that handles updates regardless of source, whether from the browser UI or the knobs on the MIDI controller
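To illustrate one of those steps, here is roughly what wrapping MIDI in an EventTarget can look like. The class name, event names, and the properties attached to each event are assumptions made for this sketch; only the MIDI status bytes (0x80 for note off, 0x90 for note on, 0xB0 for control change) come from the MIDI protocol itself.

```javascript
// Translate raw MIDI bytes into DOM-style events that the rest of
// the app can subscribe to with addEventListener().
class MIDIEvents extends EventTarget {
  // `data` is the [status, data1, data2] byte triple from a MIDI message.
  handleMessage(data) {
    const [status, data1, data2] = data;
    const command = status & 0xf0; // high nibble: message type
    const channel = status & 0x0f; // low nibble: channel 0-15
    let type = null;
    if (command === 0x90 && data2 > 0) {
      type = "noteon";
    } else if (command === 0x80 || command === 0x90) {
      // A note-on with velocity 0 is conventionally treated as a note-off.
      type = "noteoff";
    } else if (command === 0xb0) {
      type = "controlchange";
    }
    if (!type) return; // ignore everything else in this sketch
    const event = new Event(type);
    Object.assign(event, { channel, number: data1, value: data2 });
    this.dispatchEvent(event);
  }
}
```

In the browser, the bytes would come from the Web MIDI API: after navigator.requestMIDIAccess() resolves, each input's midimessage event carries them in its data property, so a listener can pass event.data straight to handleMessage(). Knobs then arrive as "controlchange" events on the same object that delivers notes.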

I suspect that when we say "build bigger projects," what people hear is that their application needs to spring fully-formed from their head like Athena, but literally nothing I've ever built has been scoped that way. It's always been a gradual accretion of functionality. Caret, for example, started out as just a text box and a keyboard input, and everything else, from tabs to project management, grew from there.

It's not that I don't have a plan at all — I knew from the start, for example, that I'd want a solid system that encapsulated the MIDI handling code and turned it into something more JavaScript-friendly — but the point of experience is learning where to put the grotesque hacks that you'll later replace with those better systems. And you get that experience by failing to make good placeholders on your first few projects.

Did I accomplish my goal of learning to program a DX-7? No. But ultimately, for these kinds of projects, that's not really the point. I learned a lot about sound, how the browser processes it, and how to handle new kinds of input. One day, I might even finish it. Brian Eno, eat your heart out.

August 3, 2021

The Mythical Document Web

Through a confluence of issues, Safari (Apple's web browser, and the only browser allowed on iOS) has been a hot topic lately:

- Multiple game streaming services have rolled out in the browser instead of through a centralized app store on iPhones, including Microsoft's Xbox Cloud. These are probably the highest profile web-only apps on iOS in years, and ironically Safari only recently became capable of hosting them.

- iOS 14.1.1 shipped with a showstopper bug in the IndexedDB API, part of a long stream of bugs that break Safari's ability to store data locally and work offline. Because browser releases are tied to the OS, developers will have to work around this for at least half a year and probably more (since many users don't upgrade promptly).

- The Safari team asked for feedback about what new features developers would like to prioritize, which reminded everyone that the existing features are largely broken and it's part of a systemic pattern of neglect and abuse. Lord knows I have my own collection of horror stories.

When these kinds of teapot tempests stir up, you can often sort the reaction from the technical community into a few buckets. At the extreme "actually, Safari is good" side, there are people who argue that the web should be replaced or downgraded into something more like Gemini, or restricted to the feature set of HTML 4 and CSS 2 (no scripting allowed). You know: cranks.

But you'll also see a second group proposing that "browsers should be for documents, not for apps" (i.e. browser developers should just stop adding new features entirely, and we should split the web in two). In this line of thinking, a browser like Safari that refuses (or is slow) to implement new APIs or features is doing the world a service, because it keeps the ecosystem tilted toward the "document" side instead of the "app" platform side, where Google has too much influence. These opinions seem more reasonable on the surface, but the people holding them are also cranks; it's just harder to explain why.

The flaw in the "document browsers, not app platforms" argument is that it assumes that web APIs can be sorted into clear, easily distinguished buckets, or indeed that there's a bright line between the two. In fact, as someone who almost entirely builds content pages (jargon about "news apps" aside), I often find in conversations with "app" developers that I'm more experienced with new browser APIs than they are. Most client-side apps, like GMail or Trello, do not actually use that much of a browser's API surface. Even really ambitious applications like Figma mostly just need methods for storage and display, and they've had those (through IndexedDB and canvas) for at least a decade now.

Should browsers be simpler and easier to implement? This kind of argument often feels very intuitive to the "document web" advocates, because they're used to thinking about new APIs through the context of the marketing bullet points for a new operating system. But when you actually look over a list at Can I Use, an awful lot of the "new" APIs are just paving cowpaths: they're designed to replace or reduce common patterns that developers were already hacking onto pages.

- Beacon API - lets you fire a request at a server without waiting for a response, which means that developers can stop intercepting link clicks and pausing navigation while they send an analytics ping.

- Fetch - makes it easier to safely load information from a server, replacing XMLHttpRequest (which was hard to use) and JSONP (which was a security nightmare).

- Intersection Observer - lets developers know when an element has entered or exited the visual viewport without having to poll constantly, which means scrolling gets smoother.

- Web Crypto - keeps people from shipping huge crypto libraries as a part of their JS bundle, and supports privacy-first features like end-to-end encryption.

- Web Assembly - creates a stable compilation target for other languages. Developers were already creating other languages that compile to JavaScript, Web Assembly just creates a standard interface and a predictable performance profile.

- Web Sockets - replaces previous methods of getting fast updates on events, such as constant polling requests or persistent server connections that would take down Apache.

- Various message channels - lets developers communicate between tabs without abusing sidechannels like window.name or local storage, useful for all the people who have GMail open in seven tabs because they never close anything.

- Grid and flex layouts - replaces various hacks and JavaScript-based layout systems, including the holy grail: vertically-centered content.

Because JavaScript is a Turing-complete language and web browsers were originally designed with lots of holes in them, none of these APIs are really adding anything new to the browser; it's just that previously, this functionality would have been added by brute force. For example, before browsers created consistent ways to autoplay video without loading a large and dithered .gif file, there were scripts to "play" frames via canvas and a tiled .jpg. You'd be amazed at the hacks like this I've seen (and some I've perpetrated).

Are there APIs in Chrome that cross into traditional native app territory? Sure, there's a few, like the Bluetooth or USB access APIs. But while pundits and native developers seem to think those are the vast majority of new browser features, I think it's clear from the listings (and my own experience) that those don't actually represent very much usage in modern apps (they're only about 1/10 of the items on the Can I Use index of JS APIs). They're certainly not what most people complaining about Safari are actually talking about.

What's especially jarring for me, as a visual journalist, is that the same people who rail against the complexity of the web platform will often praise the interactive stories from teams like mine. While I appreciate the support, I can't help but feel that they think our work is less technically challenging or innovative than a "real" developer's, and that they're happy to have a browser push the envelope only as long as it doesn't pose any competition to Apple's revenue stream.

In contrast, if you look at something like my parents' hometown paper (with an ad-blocker, of course), it's not far off from the "document web" ideal — and it looks unbelievably quaint. Despite the warm glow of nostalgia around "the old web" when men were men, browsers were small, and pages were laid out in tables, actually returning to that standard would feel like trying to use DOS for a day: clumsy, slow, and ugly.

That's why when someone says "browsers should be for pages, not for apps," we should ask specifically what they mean by that:

- Do they mean physically handing Word files around, like we did before Google Docs? Can anyone imagine going back to a native office suite for any kind of collaboration?

- Are slippy maps okay, or should we go back to the Mapquest experience of clicking a little arrow and waiting for the page to reload in order to see a little more to the east?

- Do you want responsive charts in your news articles, so that they're legible on any device? Think of all the COVID explainers and election results from the past year — should all those have been rejected for being "too app-like?"

- Should a person be able to check their e-mail from any computer, or should they have to install a dedicated native client and remember all their server details?

- Think about all the infrequent tasks you do online, and now imagine that they're all either regressed to the 1998 version or built as native code. Do you think you should have to install an app just to book a flight? To buy a book? To find a new job?

(Incidentally, it's wild how much the mobile market has been distorted on these issues: I think most people would consider it a total non-starter to need to install a desktop app to read Facebook or stream a TV show, but Apple has worked very hard to protect their platform from browser-based options on mobile.)

I think it's possible for someone to look at that list and still insist that yes, they want browsers to be Gopher clients with slightly better font choices. I personally doubt it, though — I suspect most people making the case for a "document-first" web aren't irrational, they just like the romance of the idea and haven't fully thought it through. I sympathize! That doesn't mean we have to take them seriously.

June 18, 2021

Upstream

In 2013, Google decided to shut down Google Reader, one of a number of boneheaded decisions that the company undertook in pursuit of some bizarre competition with Facebook. At the time, I decided to try an experiment: I'd write my own RSS reader, try it for a few months, and if it didn't work out I'd switch to one of the corporate replacement options.

Eight years later, I still use Weir to keep up with various feeds, blogs, and news updates. It's a deeply personal piece of software — the project that made me fall in love with the idea of code tools that are crafted just for a single person, like making your own workbench or sewing your own clothes.

But I also haven't substantially upgraded or altered Weir in all that time, even as I've learned a lot about developing on the web. So this week, while I had the apartment to myself, I decided to experiment again and build a new client (while mostly leaving the server alone). After I get a chance to work out any remaining kinks, I'll move it over to become the new built-in UI for the application.

I love my curvy UI

The original client was written in Angular 1 as a learning project. It's fine! It's mostly fine. The main problem that Angular had — and which other front-end frameworks have inherited — is that it wanted you to do all your work at a level of abstraction from the DOM, and any problems that couldn't be cleanly moved into the state object would get messy. Browsers were also worse in those days: no intersection observers for handling scroll positioning, inconsistent event handling, no support for easy concurrency with async/await. So there's some awkward behavior in the original client that never felt like it would be easy to fix, because it required crossing that abstraction barrier.

Unsurprisingly, for the rewrite I organized the code via web components — extended from the same base class that I used for Radio, and coordinated over a central event bus similar to the command system in Caret. The only code that translated over mostly unchanged was the sanitization module, which loads each post body into an inert document and processes it to remove ads, custom styles, class names, and anything else that isn't plain HTML content.

What is surprising is that the two codebases are not notably different in size — in fact, CLOC gives roughly the same line counts between the two. Of course, that only includes code I wrote. The original Weir client also requires 80KB of Angular runtime code, which has to be downloaded, parsed, compiled, and run before any of my code shows up onscreen. I'm using those precious first-paint seconds to indulge in a build-free workflow — all JS is just loaded as raw ES modules, and components fetch their styles and markup from individual HTML files instead of using Less and Browserify. It all evens out, but if I decide I'm tired of paying a startup penalty, it's certainly easy enough to add Rollup to the process.

Typically when I go framework-less, the thing I miss most is iteration in templates. It's still a little clumsy in the new client code. But combining Element.replaceChildren(), shadow DOM slots, and elements that act as template partials, it's honestly much less of an issue these days. I could add a databinding function to diff and transition elements, as Radio does for its sorted podcast lists, but (other than the feed management table) there's almost no part of the UI here where view data persists between state transitions, so it's not really worth the effort.

Scrolls like the Dead Sea

Instead of using a stack of full-window UI "scenes" for different tasks within the UI (such as settings, feed management, and reading stories), the new client is organized in three columns (admin, story listings, and reader). On desktop, they line up side-by-side across the window, and on mobile each one takes up the whole screen, similar to something like Tweetdeck or Mastodon. CSS scroll snap makes it easy to swipe between them horizontally or scroll vertically within the individual panels as their content requires. In practice this gives us a native-feeling, responsive UI pattern with no JavaScript, and it will feel more natural when snap stop is supported to prevent overscroll.

Desktop view: three columns in a row

Mobile: swipeable columns (artist's rendering)

Unfortunately, creating a mobile UI that scrolls in two directions like this means that viewport management is more difficult to handle programmatically. For example, when loading a story into the reader panel, we want to scroll smoothly over to that column from the story list, while immediately jumping within the reader content to the top of the story. In contrast, the story list should scroll smoothly both for its contents (when you use the keyboard shortcuts to select the next item in the list) and when it becomes the primary view on mobile (say, if you reach the end of all unread stories).

Ultimately, the solution was to split scrolling into separate code paths, depending on whether we want to move between columns, or within them. The code still uses scrollIntoView for panel transitions, and modules send a request over the global event bus if they want a different view to take over. The panels themselves are shell custom elements that offer individual control for scrolling content separately from the main viewport — the reader and story list dispatch DOM events up the tree to the ancestor panel when they need their column to scroll vertically to a certain element or offset, with or without an animated transition.

Promises, promises

At the start of the process, I didn't intend to do anything to the server side of Weir. It had already been built to handle cross-domain requests, so I didn't need to change anything for local development, and while it has its quirks, I'm generally pretty happy with how it works. Then I hit a snag: the "mark all as read" API route returns a count of stories that were updated, but not the new unread/total story counts. It was just irritating enough that I decided to dig in and make one little change. Of course it snowballed from there.

Since it was as much a learning experience as it was a legit project, Weir doesn't use a typical Node library for setting up its API. I wrote my own request handler and router on top of the basic HTTP module. That part of the code has actually aged pretty well. However, to manage the async chains involved in making database calls and RSS fetches, I wrote a utility library called Manos (because you're putting your code in the hands of fate), and that stuff was a mess.

These days, the ideal way to handle async flow is with the await keyword, so you don't have to write code out of order or in a snarl of function wrappers. But using await requires functions to return promises instead of accepting callbacks, and all of my code was written before JavaScript promises were standardized. So to make it a little easier to insert a db.getStatus() call in a single handler, I ended up converting the whole application to a promise-based flow.
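The shape of that conversion is roughly as follows. This is a sketch with a made-up, in-memory stand-in for the database layer; only the db.getStatus() name comes from the text above, and Node's built-in util.promisify does the same job as the hand-rolled wrapper shown here.

```javascript
// Wrap a Node-style function that takes a callback(err, result)
// so that it returns a promise instead.
function promisify(fn) {
  return (...args) =>
    new Promise((resolve, reject) => {
      fn(...args, (err, result) => (err ? reject(err) : resolve(result)));
    });
}

// Hypothetical callback-style database layer standing in for the old code.
let unread = 12;
const total = 340;
const legacyDb = {
  getStatus(callback) {
    setTimeout(() => callback(null, { unread, total }), 0);
  },
  markAllRead(callback) {
    const updated = unread;
    unread = 0;
    setTimeout(() => callback(null, updated), 0);
  },
};

const db = {
  getStatus: promisify(legacyDb.getStatus),
  markAllRead: promisify(legacyDb.markAllRead),
};

// With promises in place, the route handler reads top to bottom.
async function markAllAsRead() {
  const updated = await db.markAllRead();
  const status = await db.getStatus();
  return { updated, ...status };
}
```

The catch is that await only helps once every function in the chain returns a promise, which is why adding one call to one handler can end up touching the whole application.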

Luckily, I went through a similar process a few years back with Caret, when async/await shipped in Chrome, so I largely knew what to expect. Surprisingly, the biggest change is not in the routes at all, but in the "Hound" component that periodically fetches feed items from various URLs: subscriptions have to be grouped into batches, then each batch is requested, sometimes decompressed from gzip, fed to a streaming parser, and finally saved to the database. As implemented with Manos, the code was at best out of order, and at worst involved a lot of "clever" functional tricks.

The new Hound flow has its issues — I think there's some leftover weirdness from the way old-school Node streams interact with each other that requires pausing the request as soon as it comes in — but it now reads top to bottom, and most of the complication comes from the problem domain and not the language. At some point, updating the request code to use something like fetch() will probably eliminate most of the remaining issues.
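As an illustration of what "reads top to bottom" means for a batch fetcher, here is a simplified sketch. It is not the actual Hound code: fetchFeed and saveItems are hypothetical async functions supplied by the caller, the batch size is arbitrary, and the decompression and streaming parser steps are left out entirely.

```javascript
// Split a list into fixed-size batches.
function chunk(items, size) {
  const batches = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Fetch feeds one batch at a time and collect any failures.
async function fetchAll(subscriptions, { batchSize = 10, fetchFeed, saveItems }) {
  const failures = [];
  for (const batch of chunk(subscriptions, batchSize)) {
    // Requests within a batch run concurrently; batches run in sequence.
    const results = await Promise.allSettled(batch.map((feed) => fetchFeed(feed)));
    for (const [i, result] of results.entries()) {
      if (result.status === "fulfilled") {
        await saveItems(batch[i], result.value);
      } else {
        failures.push({ feed: batch[i], reason: result.reason });
      }
    }
  }
  return failures;
}
```

Using Promise.allSettled here means one dead feed doesn't abort the rest of its batch; the failure is just recorded and the loop moves on.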

Second-system syndrome

There's a truism in development circles that a rewrite is often a debacle: people point to the rewrite of Netscape 4.0 that's blamed for tanking the company, or the Copland OS at Apple. My personal suspicion is that this is survivorship bias: Netscape itself was originally a from-scratch rewrite of the Mosaic browser, and while Copland was not a success, current Mac OS is built on the bones of NeXT, itself a from-scratch OS.

In any case, most people aren't building browsers or operating systems. For these kinds of small projects, I think there's value in taking another run at an idea, armed with knowledge about what worked or didn't work the first time around. In fact, that might be the best argument for these kinds of small projects (API clients, media players, browser extensions): they're a chance to stop, try something different, and measure our skills against our past selves. I learn a lot from these little rewrites, and I think it's safe to say that I am better at this than I was eight years ago.

I still wish they'd just bring back Reader, though.

February 15, 2021

Between Amber and Chaos

There isn't, in my opinion, a cooler name for a web standard than the Shadow DOM. The closest runner-up is probably the SubtleCrypto API, and after a decade of Bitcoin the appeal of anything with "crypto" in the name is pretty cloudy. So it's a low bar, but still: Shadow DOM. Pretty cool name.

Although I've been using web components for a long time, I've only been using Shadow DOM with them for a couple of years, and generally in pretty limited ways. For an upcoming project at NPR, I took the chance to really dig into how it's used in a mixed-content environment, one where custom elements are not just leaves of the HTML tree, but also wrap branches of extensive HTML content. The experience was pretty eye-opening, and surprisingly positive!

Walking the pattern

Let's start by talking about what it is. Like most of the tech under the web components "brand," Shadow DOM is meant to retroactively give developers tools that "explain" what the browser already does, and hook into the same extension points. The goal is to make it possible for regular people to rapidly build out new functionality, because there's no "magic" behind the scenes.

For example, let's create a humble <select> tag:

Right off the bat, this tag has some special treatment that we can't immediately explain through regular HTML: it has a "thumb" (the arrow on the right) that doesn't appear in the DOM and can't be meaningfully styled, but is clearly a UI element that reacts to events. The options, defined as children of the tag, are still surfaced visibly, but not in the same way that children of a paragraph or a regular text element are. Instead, they're moved to a new location in the dropdown menu and shown conditionally (or, on mobile, through an entirely different UI context).

Using our previous HTML/JS toolkit, it's not possible to duplicate these behaviors, or similar behaviors from tags like <video> or <input type="range">. To explain the "magic" of these elements we need to add Shadow DOM. It gives developers an API to attach a hidden document fragment called a "shadow root" to any given element, which replaces the visible contents of the element. However, even though they're shown to us in the browser, the contents of that document fragment are hidden from normal JavaScript queries, and its CSS styles are isolated — from the inside, you have a blank slate to work from, and from the outside it's as though that shadow content is an intrinsic part of the tag itself, just like the select box's dropdown UI.

What about those select box options, which are written as child tags but appear in a very different way? For that, we add in a <slot> element: inside the shadow, this element will re-parent any children placed in the host element. For example, given a shadow-dom element with the following in its shadow root:

<b> SHADOW START </b>

<slot></slot>

<b> SHADOW END </b>

We could write this in our page as:

<shadow-dom>

<i>HELLO WORLD</i>

</shadow-dom>

The contents of the <i> element aren't shown directly. Instead, they're moved inside the slot element, meaning that the page output will read SHADOW START HELLO WORLD SHADOW END. But, and this is the cool part, that italic tag appears to scripts and dev tools as though it was just a regular child of the <shadow-dom> element — it can be styled as normal, you can query for it, attach event listeners, and edit it as normal. The bold tags, meanwhile, remain in the shadow: they're visible on the page, but they can't be accessed from scripts and their styles are completely isolated.

This, then, is how Shadow DOM "explains" how a select box works. The box itself, including the current item and the thumb UI, lives in the shadow. The options you write into the tag are reparented to a slot inside the drop-down area, to be shown when you click the element. We can use this API to create self-contained UI for an application or document without having to worry about new markup or styles polluting the page.
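For reference, the <shadow-dom> element from the example could be registered with something like the sketch below. The defineShadowDom wrapper is an invention for this example: it takes the browser globals as a win argument purely so the code can be exercised outside a page, and in a real script you would more likely define the class at the top level.

```javascript
// Register the <shadow-dom> element used in the example above.
// `win` defaults to the global object, which is `window` in a browser.
function defineShadowDom(win = globalThis) {
  class ShadowDom extends win.HTMLElement {
    constructor() {
      super();
      // Attaching a shadow root is allowed in the constructor, even
      // though touching the element's own children or attributes is not.
      const root = this.attachShadow({ mode: "open" });
      // Light DOM children of the host are displayed where the <slot> sits.
      root.innerHTML = "<b> SHADOW START </b><slot></slot><b> SHADOW END </b>";
    }
  }
  win.customElements.define("shadow-dom", ShadowDom);
  return ShadowDom;
}
```

With mode set to "open", the shadow content can still be reached deliberately through the element's shadowRoot property, but ordinary queries like document.querySelector("b") will not find the bold tags.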

Enter the Logrus

Not everything is rosy, of course. One long-standing complication is that custom elements can't touch their own contents or attributes during construction, for reasons that are tedious and not worth going into here, but they can attach and modify their shadow root. So it's really tempting in custom elements to do everything in a shadow, because it radically simplifies your templating. Now you have null problems. In Radio, I built the entire UI this way, which worked great until I needed to inspect an element that's inside three nested shadow roots, or if I needed to query for the current active element.

Another misunderstanding has been people thinking shadow roots can replace something like Styled Components in terms of style isolation. But Shadow DOM is more like an iframe than anything else: explicitly inherited style properties (like font family) will travel through, but otherwise it's a pretty hard barrier. If you want to provide styling hooks for a component, you need to either provide preset options or document a set of CSS custom properties. More importantly, the mechanisms for injecting styles into a shadow root (typically by putting a <style> tag inside) don't play well with standard build tooling.

By contrast, actually populating Shadow DOM tends to be cumbersome without build tooling in place to help. A lot of tutorials recommend building it from an inert <template> tag, which used to be elegantly handled via HTML imports. Now that those are deprecated, you either have to place the Shadow DOM template in your page manually (no), lean into async component definition (awkward), embed the markup into your script as a big JS literal (ugly), or use a build plugin to pull strings in as needed (sigh). None of these are unworkable, or even that difficult, but none of them are nearly as nice as simply being able to define a component's styles, shadow markup, and behavior in a single, imported HTML file.

Major Arcana

My personal feeling is that the biggest barrier to effective Shadow DOM usage, in a lot of cases, is that many developers haven't learned about the browser as much as they've learned about React or another framework, and those frameworks have often diverged in philosophy from the DOM. If you're used to thinking of the page as a JSX function value, the idea of a secret, stateful document fragment that replaces the DOM you tried to render is probably pretty bizarre.

But as someone who writes a lot of minimalist code directly against browser APIs, I actually think Shadow DOM fits in well with my mental model of how elements work, and it has clarified a lot of my thinking on how to build effectively with custom elements — especially through slots and slotted elements.

I'm still learning and experimenting, but I feel comfortable saying that if you're building custom elements, the rule of thumb should be "use Shadow DOM, but not very much." The more you're able to expose HTML to the light DOM by surfacing it through slots, the easier it is to compose them and style content. For example, a custom element that creates a tabbed UI from its children is a great Shadow DOM use case: the tab list lives in the shadow and is generated implicitly by iterating over the slotted elements. Since the actual tab contents are placed back in the light DOM, they're still easy to style and inspect. To really go with the grain of the platform, the host component might show or hide those slotted blocks using the hidden attribute, instead of setting styles or adding classes.

The exception is for elements that should not have children (like input tags) or where children are used for configuration (think video tags or my old Leaflet map component). With these "leaf" components, Shadow DOM lets you treat inner HTML as a domain-specific language, while your visible content lives entirely in the shadow root. That's a great way to create customized behavior and still expose it to designers or novice front-end developers who are very comfortable with markup but would balk at writing a lot of JS.

Ultimately, Shadow DOM feels like it really crystallizes the role of custom elements as a tool for implementing UI widgets, not as a competitor for Svelte. Indeed, by providing a mechanism for moving complex functionality into an opaque facade, it's probably the biggest gift to the "web pages are for documents, not apps" crowd in several years: if you want to build a big single page app, Shadow DOM doesn't really move the needle, but it's great for injecting discrete units of content into an article. As someone who crosses that app/document divide a lot, I'm really excited to see what I can do with it this year.

March 7, 2020

Call Me Al

With Super Tuesday wrapped up, I feel pretty confident in writing about Betty, the new ArchieML parser that powered NPR's new election liveblogs. Language parsers are a pretty fundamental computer science discipline, which of course means that I never formally learned about them. Betty isn't a very advanced parser, compared to something that can handle a real programming language, but it's still pretty neat — and you can't say it's not battle-tested, given the tens of thousands of concurrent readers who unknowingly consumed its output last week.

ArchieML is a markup language created at the New York Times a few years back. It's designed to be easy to learn, error-tolerant, and well-suited to simultaneous editing in Google Docs. I've used it for several bigger story projects, and like it well enough. There are some genuinely smart features in there, and on a slower development cycle, it's easy enough to hand-fix any document bugs that come up.

Unfortunately, in the context of the NPR liveblog system, which deploys updated content on a constant loop, the original ArchieML had some weaknesses that weren't immediately obvious. For example, its system for marking up multi-line strings — signalling them with an :end token — proved fragile in the face of reporters and editors who were typing as fast as they could into a shared document. ArchieML's key-value syntax is identical to common journalistic structures like Sanders: 1,000, which would accidentally turn what the reporter thought was an itemized list into unexpected new data fields and an empty post body. I was spending a lot of time writing document pre-processors using regular expressions to try to catch errors at the input level, instead of processing them at the data level, where it would make sense.
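To make the collision concrete, here is a minimal sketch of a line classifier. The `KEY_LINE` pattern and the `classifyLine` function are simplified stand-ins assumed for illustration, not the actual expressions from the ArchieML module:

```javascript
// Simplified, hypothetical key/value pattern: a key made of letters, digits,
// underscores, dashes, or dots, followed by a colon and the value.
const KEY_LINE = /^\s*([A-Za-z0-9_.-]+)\s*:\s*(.*)$/;

function classifyLine(line) {
  const match = KEY_LINE.exec(line);
  if (!match) return { type: "text", value: line };
  return { type: "key", key: match[1], value: match[2] };
}

// A reporter's tally line is indistinguishable from a data field:
console.log(classifyLine("Sanders: 1,000"));
// A sentence with spaces before the colon stays plain text:
console.log(classifyLine("Here are the results so far: it's close"));
```

Any pattern loose enough to be friendly to editors will also match a one-word name followed by a colon, which is why the ambiguity has to be resolved by syntax rather than by cleverer matching.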

To fix these errors, I wanted to introduce a more explicit multi-line string syntax, as well as offer hooks for input validation and transformation (for example, a way to convert the default string values into native types during parsing). My original impulse was to patch the module offered by the Times to add these features, but it turned out to be more difficult than I'd thought:

- The parser used many, many regular expressions to process individual lines, which made it more difficult to "read" what it was doing at any given time, or to add to the parsing in an extensible way.

- It also used a lazy buffering strategy in a single pass, in which different values were added to the output only when the next key was encountered in the document, which made for short but extremely dense code.

- Finally, it relied on a lot of global scope and nested conditionals in a way that seemed dangerous. In the worst case you'd end up with a condition like !isSkipping && arrayElement.exec(input) && stackScope && stackScope.array && (stackScope.arrayType !== 'complex' && stackScope.arrayType !== 'freeform') && stackScope.flags.indexOf('+') < 0, which I did not particularly want to untangle.

Okay, I thought, how hard can it be to write my own parser? I was a fool. Four days later, I emerged from a trance state with Betty, which manages to pass all the original tests in the repo as well as some of my own for my new syntax. I'm also much more confident in our ability to maintain and patch Betty over time (the ArchieML module on NPM hasn't been updated since 2016).

Betty (who Wikipedia tells me was the mechanic in the comics, appropriately enough) is about twice as large as the original parser was. That size comes from the additional structure in its design: instead of a single pass through the text, Betty builds the final output from three escalating passes.

- The tokenizer runs through the document character by character, and outputs a stream of tokens containing one or more characters of text bucketed into different types.

- The parser takes that stream of tokens, reassembles them into lines (as is required by the ArchieML spec), and then matches those against syntax patterns to emit a list of assembly instructions (such as setting a key, creating an array, or buffering text).

- Finally, the assembler runs the instructions through a final cleanup (consolidating and trimming values) and then uses them to build the output object.
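The three passes above can be sketched for a toy `key: value` format. This is not Betty's code: the token types, instruction names, and the rule that unmatched lines are appended to the previous key are all assumptions made to keep the example short (ArchieML itself handles multi-line values differently).

```javascript
// Pass 1: walk the input character by character, grouping characters into typed tokens.
function tokenize(text) {
  const tokens = [];
  let buffer = "";
  const flush = () => {
    if (buffer) tokens.push({ type: "TEXT", value: buffer });
    buffer = "";
  };
  for (const c of text) {
    if (c === ":" || c === "\n") {
      flush();
      tokens.push({ type: c === ":" ? "COLON" : "NEWLINE", value: c });
    } else {
      buffer += c;
    }
  }
  flush();
  return tokens;
}

// Pass 2: regroup tokens into lines, match each line against a syntax pattern,
// and emit an instruction for the assembler.
function parse(tokens) {
  const lines = [[]];
  for (const token of tokens) {
    if (token.type === "NEWLINE") lines.push([]);
    else lines[lines.length - 1].push(token);
  }
  const instructions = [];
  for (const line of lines) {
    if (!line.length) continue;
    const [first, second, ...rest] = line;
    const name = first.type === "TEXT" ? first.value.trim() : "";
    const isKey = name !== "" && !/\s/.test(name) && second !== undefined && second.type === "COLON";
    if (isKey) {
      instructions.push({ type: "key", key: name, value: rest.map(t => t.value).join("") });
    } else {
      instructions.push({ type: "buffer", value: line.map(t => t.value).join("") });
    }
  }
  return instructions;
}

// Pass 3: trim values and build the output object from the instructions.
function assemble(instructions) {
  const output = {};
  let lastKey = null;
  for (const step of instructions) {
    if (step.type === "key") {
      lastKey = step.key;
      output[lastKey] = step.value.trim();
    } else if (lastKey !== null) {
      output[lastKey] += "\n" + step.value.trim();
    }
  }
  return output;
}

const doc = "headline: Polls close\nbody: Results are coming in.\nMore at 9 p.m.";
const tokens = tokenize(doc);
const instructions = parse(tokens);
console.log(assemble(instructions));
```

Because each stage returns plain data, `tokens` and `instructions` can be logged on their own when something goes wrong, which is the debugging benefit of splitting the work this way.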

Essentially, Betty trades concision for clarity: during debugging, it was handy to be able to look at the intermediate outputs of each stage to see where something went wrong. Each pipeline section is also much more readable, since it only needs to be concerned with one stage of the process, so it uses less global state and does less bookkeeping. The parser, for example, doesn't need to worry about the current object scope or array types, but can simply defer those to the assembler.

But the real win is how simple it is to add new syntax to ArchieML, something the original parser was not built to accommodate. Our new multi-line type means that editors and reporters can write plain English in posts and not have to worry about colliding with the document syntax in unexpected ways. Switching to Betty cleaned up the liveblog code substantially, since we can also take advantage of the assembler's pipeline hooks: keys are automatically camel-cased (Google Docs likes to sentence-case keys if you're not careful), and values can be converted automatically to JavaScript Date objects or numbers at the earliest stage, rather than during output or templating.
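As a rough illustration of what key and value hooks like these might look like, here is a small sketch. The names `camelCase`, `castValue`, `applyHooks`, `onKey`, and `onValue` are hypothetical and are not Betty's actual API:

```javascript
// Hypothetical key hook: turn "Published At" or "published_at" into "publishedAt".
function camelCase(key) {
  return key
    .trim()
    .toLowerCase()
    .replace(/[\s_-]+(\w)/g, (_, letter) => letter.toUpperCase());
}

// Hypothetical value hook: convert strings that look like plain numbers
// or ISO dates into native types, and leave everything else alone.
function castValue(value) {
  const text = value.trim();
  if (/^-?\d+(\.\d+)?$/.test(text)) return Number(text);
  if (/^\d{4}-\d{2}-\d{2}(T[\d:.]+Z?)?$/.test(text)) return new Date(text);
  return value;
}

// Run every key and value through the supplied hooks while building the output.
function applyHooks(fields, { onKey = k => k, onValue = v => v } = {}) {
  const output = {};
  for (const [key, value] of Object.entries(fields)) {
    output[onKey(key)] = onValue(value);
  }
  return output;
}

const post = applyHooks(
  { "Published At": "2020-03-03T19:00:00Z", "Delegate count": "1357", Headline: "Polls close" },
  { onKey: camelCase, onValue: castValue }
);
console.log(post);
```

Doing the conversion while the object is being built means templates downstream can rely on `post.publishedAt` being a Date rather than re-parsing strings.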

If you'd told me a few years ago that I'd be writing something this complicated, I would have been extremely surprised. I don't have a formal CS background, nor did I ever want one. Parsing is often seen as black magic by self-taught developers. But as I have argued in the past, being able to write even simple parsers is an incredibly valuable skill for data journalism, where odd or proprietary data formats are not uncommon. I hope Betty will not just be a useful library for my work at NPR, but also a valuable teaching tool in the community.

November 20, 2019

Repackaged apps

Earlier this week, a member of the Google developer relations team ported Caret to the web. He's actually the second person from Chrome to do this — a member of the browser team created a separate port last month. The reasons for this are simple: Caret is a complete application with a relatively small API surface, most of which revolves around file I/O. Chrome has recently rolled out trial support for the Native Filesystem API, which lets web apps open and edit local files. So it's an ideal test case.

I want to be clear, Google's not doing anything wrong here. Caret is licensed under the GPL, which means pretty much anyone can take it and do whatever they want, as long as they give me credit for the code I wrote and distribute the source, both of which are happening here. They haven't been rude about it (Ben, the earlier developer, very kindly reached out to me first), and even if they were, I couldn't stop it. I intentionally made that decision early on with Caret, because I believe giving the code away for something as fundamental as a text editor is the right thing to do.

That said, my feelings about these ports are extremely mixed.

On the one hand, after a half-decade of semi-active development, Caret has found a nice audience among students and amateur hackers. If it's possible to expand that audience — to use Google's market power to give more students, and more amateurs, the tools to realize their own goals — that's an exciting possibility.

But let's be clear: the reason a port is necessary is that Google has been slowly removing support for Chrome Apps like Caret from their browser, in favor of active development on progressive web apps. After building on their platform and watching them strip support away from my users on Windows and OS X, with the clear intention of eventually removing it from Chrome OS after its Android support is advanced enough, I'm not particularly thrilled about the idea of using it to push PR for new APIs in Chrome (no other browsers have announced support for Native Filesystem).

People have ported Caret before. But it feels very different when it's a random person who wants to add a particular feature, versus a giant tech corporation with a tremendous amount of power and influence. If Google wants to become the new "owner" of Caret, they're perfectly capable of it. And there's nothing I can do to stop them. Whether they're going to do this or not (I'm pretty sure they won't) doesn't stop my heart from skipping a beat when I think about it. The power gradient here is unsettling.

Lately, a group of journalism students at Northwestern University here in Illinois came under fire for apologizing for and retracting their coverage of protests against former Attorney General Jeff Sessions. Their detractors include the usual suspects, like Bari Weiss, the NYT columnist who regularly publishes columns in the biggest paper in the world about how she's being silenced by critics, but also a number of legitimate journalists concerned about self-censorship. But the editorial itself is quite clear on why they took this step, including one telling paragraph:

We also wanted to explain our choice to remove the name of a protester initially quoted in our article on the protest. Any information The Daily provides about the protest can be used against the participating students — while some universities grant amnesty to student protesters, Northwestern does not. We did not want to play a role in any disciplinary action that could be taken by the University. Some students have also faced threats for being sources in articles published by other outlets. When the source in our article requested their name be removed, we chose to respect the student’s concerns for their privacy and safety. As a campus newspaper covering a student body that can be very easily and directly hurt by the University, we must operate differently than a professional publication in these circumstances.

You may disagree with the idea that journalists should take down or adjust coverage of public events and persons, but it is legitimately more complicated than just "liberal snowflakes bowing to public pressure." No one is debating that the reporters can take pictures of public protests, or publish the names of those involved. But should they? Likewise, when a newsroom's community is upset about coverage, editors can ignore the outcry, or respond with scorn. It shouldn't be surprising that certain audiences turn away or become distrustful of a paper that does so.

The relationships in this situation, as with various ports of Caret, are complicated by power. In both cases, what would be permissible or normal in one context is changed by the power differential of the parties involved, whether that's students to the paper to the university, or me to Google, or data journalists to the people in their FOIA requests, or tech workers to their employers' government contracts.

Most newsrooms don't think very much about power, in my experience, or they think of it as something they're supposed to check, not something they possess. But we need to take responsibility for our own power. It's possible that the students at the Daily Northwestern overreacted — if you protest in public, you should probably expect that pictures are going to be taken — but they're at least engaging with the question of what to do with the power they wield (directly and, in the case of the university's discipline system, indirectly). Using power in ways that have a real chance of harming your readers, just on principle and the idea that "that's what journalists do," is tautocracy at work.

As much as anything, I think this is one of the key generational shifts taking place in both software and journalism. My own sympathies tend toward a vision of both that prioritizes harm reduction over abstractions like "free speech" or "intellectual property," but I don't have any pat answers. Similarly, I've become acclimated to the idea of a web-based Caret port that's out of my hands, because I think the benefits to users outweigh the frustration I feel personally. I can't do anything about it now. But I will definitely learn from this experience, and it will change how I plan future projects.

May 21, 2019

Radios Hack

The past few months, I've mostly been writing in public for NPR's News Apps team blog, with posts on the new Dailygraphics rig (and setting it up on Windows), the Mueller report redactions, and building a scrolling audio story. However, in my personal time, I decided to listen to some podcasts. So naturally, I built a web-based listener app, just for me.

I had a few goals for Radio as I was building it. The first was my own personal use case, in which I wanted to track and listen to a few podcasts, but not actually install a dedicated player on my phone. A web app makes perfect sense for this kind of ephemeral use case, especially since I'm not ever really offline anymore. I also wanted to try building something entirely using Web Components instead of a UI framework, and to use modern features like import — in part because I wanted to see if I could recommend it as a standard workflow for younger developers, and for internal newsroom tools.

Was it a success? Well, I've been using it to listen to Waypoint and Says Who for the last couple of months, so I'd say on that metric it proved itself. And I certainly learned a lot. There are definitely parts of this experience that I can whole-heartedly recommend — importing JavaScript modules instead of using a bundler is an amazing experience, and is the right kind of tradeoff for the health of the open web. It's slower (equivalent to dynamic AMD imports) but fast enough for most applications, and it lets many projects opt entirely out of beginner-unfriendly tooling.

Not everything was as smooth. I'm on record for years as a huge fan of Web Components, particularly custom elements. And for an application of this size, it was a reasonably good experience. I wrote a base class that automated some of the rough edges, like templating and synchronizing element attributes and properties. But for anything bigger or more complex, there are some cases where the platform feels lacking — or even sometimes actively hostile.

For example: in the modern, V1 spec for custom elements, they're not allowed to manipulate their own contents until they've been placed in the page. If you want to skip the extra bookkeeping that would require, you are allowed to create a shadow root in the constructor and put whatever HTML you want inside. It feels very much like this is the workflow you're supposed to use. But shadow DOM is harder to inspect at the moment (browser tools tend to leave it collapsed when inspecting the page), and it causes problems with events (which do not cross the shadow DOM boundary unless you alter your dispatch code).

There's also no equivalent in Web Components for the state management that's core to most modern frameworks. If you want to pass information down to child components from the parent, it either needs to be set through attributes (meaning you only get strings) or properties (more bookkeeping during the render step). I suspect if I were building something larger than this simple list-of-lists, I'd want to add something like Redux to manage data. This would tie my components to that framework, but it would substantially clean up the code.

Ironically, the biggest hassle in the development process was not from a new browser feature, but from a very old one: while it's very easy to create an audio tag and set its source to any sound clip on the web, actually getting the list of audio files is often impossible, thanks to CORS. Most podcasts do not publish their episode feeds with the cross-origin header set, so the browser's security settings shut down the AJAX requests completely. It's wild that in 2019, there's still no good way to make a secure request (say, one that transmits no cookies or custom headers) to another domain. I ended up running the final app on Glitch, which provides basic Node hosting, so that I could deploy a simple proxy for feed data.

For me, the neat thing about this project was how it brought back the feeling of hackability on the web, something I haven't really felt since I first built Caret years ago. It's so easy to get something spun up this way, and that's a huge incentive for creating little personal apps. I love being able to make an ugly little app for myself in only a few hours, instead of needing to evaluate between a bunch of native apps run by people I don't entirely trust. And I really appreciated the ways that Glitch made that easy to do, and emphasized that in its design. It helps that podcasting, so far, is still a platform built on open web tech: XML and MP3. More of this, please!

October 2, 2018

Generators: the best JS feature you're not using

People love to talk about "beautiful" code. There was a book written about it! And I think it's mostly crap. The vast majority of programming is not beautiful. It's plumbing: moving values from one place to another in response to button presses. I love indoor plumbing, but I don't particularly want to frame it and hang it in my living room.

That's not to say, though, that there isn't pleasant code, or that we can't make the plumbing as nice as possible. A program is, in many ways, a diagram for a machine that is also the machine itself — when we talk about "clean" or "elegant" code, we mean cases where those two things dovetail neatly, as if you sketched an idea and it just happened to do the work as a side effect.

In the last couple of years, JavaScript updates have given us a lot of new tools for writing code that's more expressive. Some of these have seen wide adoption (arrow functions, template strings), and deservedly so. But if you ask me, one of the most elegant and interesting new features is generators, and they've seen little to no adoption. They're the best JavaScript syntax that you're not using.

To see why generators are neat, we need to know about iterators, which were added to the language in pieces over the years. An iterator is an object with a next() method, which you can call to get a new result with either a value or a "done" flag. Initially, this seems like a fairly silly convention (who wants to manually call a loop function over and over, unwrapping its values each time?), but the goal is actually to enable new syntax once the convention is in place. In this case, we get the generic for ... of loop, which hides all the next() and result.done checks behind a familiar-looking construct:

    for (var item of iterator) {
      // item comes from iterator and
      // loops until the "done" flag is set
    }

Designing iteration as a protocol of specific method/property names, similar to the way that Promises are signaled via the then() method, is something that's been used in languages like Python and Lua in the past. In fact, the new loop works very similarly to Python's iteration protocol, especially with the role of generators: while for ... of makes consuming iterators easier, generators make it easier to create them.
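To see what the protocol looks like without any syntax help, here is a hand-written iterator consumed both manually and with for ... of. The `countdown` function is a made-up example; note that for ... of also expects the object to expose a `Symbol.iterator` method, which here simply returns the iterator itself.

```javascript
// A hand-written iterator: an object with a next() method that returns
// { value, done } results.
function countdown(start) {
  let current = start;
  return {
    next() {
      if (current < 1) return { value: undefined, done: true };
      return { value: current--, done: false };
    },
    // Returning itself from Symbol.iterator is what lets for ... of accept it.
    [Symbol.iterator]() {
      return this;
    }
  };
}

// Manual consumption: call next() and check the flag yourself.
const manual = [];
const iterator = countdown(3);
let result = iterator.next();
while (!result.done) {
  manual.push(result.value);
  result = iterator.next();
}

// The same loop, with the protocol hidden behind for ... of.
const looped = [];
for (const value of countdown(3)) {
  looped.push(value);
}

console.log(manual, looped); // both are [3, 2, 1]
```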

You create a generator by adding a * after the function keyword. Within the generator, you can output a value using the yield keyword. This is kind of like a return, but instead of exiting the function, it pauses it and allows it to resume the next time a value is requested. This is easier to understand with an example than it is in text:

    function* range(from, to) {
      while (from <= to) {
        yield from;
        from += 1;
      }
    }

    for (var num of range(3, 6)) {
      // logs 3, 4, 5, 6
      console.log(num);
    }

Behind the scenes, the generator will create a function that, when called, produces an iterator. When the function reaches its end, it'll be "done" for the purposes of looping, but internally it can yield as many values as you want. The for ... of syntax will take care of calling next() for you, and the function starts up from where it was paused from the last yield.
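Calling next() by hand makes the pause-and-resume behavior visible. This sketch repeats the range generator so it can run on its own:

```javascript
function* range(from, to) {
  while (from <= to) {
    yield from;
    from += 1;
  }
}

const numbers = range(3, 4);
const first = numbers.next();  // runs the function body until the first yield
const second = numbers.next(); // resumes after the yield, loops, and yields again
const third = numbers.next();  // resumes, the loop ends, and the function returns
console.log(first, second, third);
// { value: 3, done: false } { value: 4, done: false } { value: undefined, done: true }
```

Nothing inside range() runs when it is first called; the body only starts executing on the first next().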

Previously, in JavaScript, if we created a new collection object (like jQuery or D3 selections), we would probably have to add a method on it for doing iteration, like collection.forEach(). This new syntax means that instead of every collection creating its own looping method (that can't be interrupted and requires a new function scope), there's a standard construct that everyone can use. More importantly, you can use it to loop over abstract things that weren't previously loopable.
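As a sketch of how a custom collection can opt into the standard loop, a class can define a generator method under `Symbol.iterator`. The `Playlist` class here is invented for illustration and stands in for something like a selection object:

```javascript
// A made-up collection class.
class Playlist {
  constructor(...tracks) {
    this.tracks = tracks;
  }
  // A generator method named Symbol.iterator makes instances loopable.
  *[Symbol.iterator]() {
    for (const track of this.tracks) {
      yield track;
    }
  }
}

const playlist = new Playlist("intro", "interview", "credits");
const heard = [];
for (const track of playlist) {
  if (track === "credits") break; // unlike forEach(), the loop can stop early
  heard.push(track);
}
console.log(heard); // ["intro", "interview"]
console.log([...playlist].length); // spread syntax uses the same protocol: 3
```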

For example, let's take a problem that many data journalists deal with regularly: CSV. In order to read a CSV, you probably need to get a file line by line. It's possible to split the file and create a new array of strings, but what if we could just lazily request the lines in a loop?

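    function* readLines(str) {
      var buffer = "";
      for (var c of str) {
        if (c == "\n") {
          // end of a line: hand it to the caller and start over
          yield buffer;
          buffer = "";
        } else {
          buffer += c;
        }
      }
      // emit the last line if the text doesn't end with a newline
      if (buffer) yield buffer;
    }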

Reading input this way is much easier on memory, and it's much more expressive to think about looping through lines directly versus creating an array of strings. But what's really neat is that it also becomes composable. Let's say I wanted to read every other line from the first five lines (this is a weird use case, but go with it). I might write the following:

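    // yield at most x items, then stop without pulling another from the source
    function* take(x, list) {
      if (x <= 0) return;
      var i = 0;
      for (var item of list) {
        yield item;
        i++;
        if (i == x) return;
      }
    }

    // yield the first item, skip the second, and so on
    function* everyOther(list) {
      var other = true;
      for (var item of list) {
        if (other) yield item;
        other = !other;
      }
    }

    // get my weird subset
    // (file is assumed to be a string of text loaded elsewhere)
    var lines = readLines(file);
    var firstFive = take(5, lines);
    var alternating = everyOther(firstFive);
    for (var value of alternating) {
      // ...
    }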

Not only are these generators chained, they're also lazy: until I hit my loop, they do no work, and they'll only read as much as they need to (in this case, only the first five lines are read). To me, this makes generators a really nice way to write library code, and it's surprising that it's seen so little uptake in the community (especially compared to streams, which they largely supplant).
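The laziness can be checked directly by recording each line as the source produces it. This is a self-contained sketch with its own versions of the three helpers; the `linesRead` log and the sample `file` string are additions for the demonstration, and `take` is written to stop as soon as it has yielded its quota:

```javascript
const linesRead = [];

function* readLines(str) {
  let buffer = "";
  for (const c of str) {
    if (c === "\n") {
      linesRead.push(buffer); // record that the source was actually consumed
      yield buffer;
      buffer = "";
    } else {
      buffer += c;
    }
  }
  if (buffer) {
    linesRead.push(buffer);
    yield buffer;
  }
}

function* take(x, list) {
  if (x <= 0) return;
  let i = 0;
  for (const item of list) {
    yield item;
    i++;
    if (i >= x) return; // stop before pulling another item from the source
  }
}

function* everyOther(list) {
  let keep = true;
  for (const item of list) {
    if (keep) yield item;
    keep = !keep;
  }
}

const file = "a\nb\nc\nd\ne\nf\ng\n";
const pipeline = everyOther(take(5, readLines(file)));
const readBeforeLooping = linesRead.length;
console.log(readBeforeLooping); // 0: building the chain does no work

const picked = [...pipeline];
console.log(picked);    // ["a", "c", "e"]
console.log(linesRead); // ["a", "b", "c", "d", "e"]
```

Lines "f" and "g" are never assembled, because nothing downstream ever asks for them.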

So much of programming is just looping in different forms: processing delimited data line by line, shading polygons by pixel fragment, updating sets of HTML elements. But by baking fundamental tools for creating easy loops into the language, it's now easier to create pipelines of transformations that build on each other. It may still be plumbing, but you shouldn't have to think about it much — and that's as close to beautiful as most code needs to be.

September 16, 2017

Hacks and Hackers

Last month, I spoke at the first SeattleJS conference, and talked about how we've developed our tools and philosophy for the Seattle Times interactives team. The slides are available here, if you have trouble reading them on-screen.

I'm pretty happy with how this talk turned out. The first two-thirds were largely given, but the last section went through a lot of revision as I wrote and polished. It originally started as a meditation on training and junior developers, which is an important topic in both journalism and tech. Then it became a long rant about news organizations and native applications. But in the end, it came back to something more positive: how the web is important to journalism, and why I believe that both communities have a lot to gain from mutual education and protection.

In addition to my talk, there are a few others from SeattleJS that I really enjoyed:

- Ashley Williams: JavaScript close to the metal - a typically charming look at abstraction, operating systems, and the web platform

- Steve Kinney: Building musical instruments with Web Audio - a really funny talk on building and playing a modular synthesizer

- Shagufta Gurmukhdas: Web-based VR - Although I'm still not convinced of the value of WebVR, I do love the way this talk shows how custom elements create a beginner-friendly language for new tech

November 7, 2016

Return values

This is a tale of two algorithms.

Every now and then, someone publishes a piece of crossover writing that blows me away. Last week, Claudia Lo at Rock Paper Shotgun examined the algorithms behind relationship simulation in the indie game RimWorld by decompiling its code:

The question we're asking is, 'what are the stories that RimWorld is already telling?' Yes, making a game is a lot of work, and maybe these numbers were just thrown in without too much thought as to how they'd influence the game. But what kind of system is being designed, that in order to 'just make it work', you wind up with a system where there will never be bisexual men? Or where all women, across the board, are eight times less likely to initiate romance?

I want to take a moment to admire Lo's writing, which takes a difficult technical subject (bias in object-oriented simulation code) and explains it in a way that's both readable and ultimately damning to its subject. It's a calm dissection of how stereotypes can get encoded (literally!) into entertainment, and even goes out of its way to be generous to the developer. I haven't talked much about games journalism in the last few years, but this is the kind of work I wish I'd thought to do when I was still writing.

In short, Lo notes that the code for RimWorld combines sexuality, gender, age, and emotional modeling in a way that's more than a little unsettling: women in the game are all bisexual and may be attracted to much older partners, while men have a narrower (and notably younger) attraction range. The game also models penalties for rejection, but not for being harassed, which leaves players trying to solve bizarre problems like "attractive lesbians that destroy community morale."

Part of what makes Lo's story interesting is how deeply this particular simulation reflects not just general social biases, but the particular biases of its developer. It took a lot more work to encode a complex, weighted system of asymmetrical attraction than it would to just use the same basic algorithm for everyone. Someone planned this very carefully — in fact, since the developer jumped into the comments on the piece with a pseudoscientific rant about the non-existence of bisexual men, we know that he's thought way too much about it. He's really invested in this view of "how people are," and very upset that anyone would think that it's just the slightest bit unrealistic or ignorant.

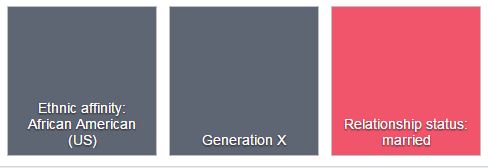

Keep that in mind when reading ProPublica's investigation into Facebook ad preferences, in which advertisers can target ads toward (or against) particular "ethnic affinities" in violation of federal laws against discrimination in housing and other services. "Affinity" is Facebook's term, a way of hiding behind "we're not actually discriminating on race." Even for the blinkered Silicon Valley, it's astonishing that nobody thought about the ways that this could be misapplied.

The existence of these affinities may be confusing, since Facebook never actually asks about your ethnicity when setting up a profile. Instead, it generates this metadata based on some kind of machine-learning process as you use the service. As is typical for Facebook, this is not only a very bad idea, it's also very badly implemented. I know this myself: Facebook thinks I have an ethnic affinity of "African-American (US)," probably because my friend group via Urban Artistry is largely black, even though I am one of the whitest people in America (there doesn't seem to be an "Ethnic Affinity: White" tag, maybe because that's considered the default setting).

Both Facebook and RimWorld are examples of programming real-world bias into technology, but of the two it's probably Facebook that bothers me more. For the game, a developer very intentionally designed this system, and is clear about his reasons for doing so. But in Facebook's case, this behavior is partially emergent, which gives it deniability. There's no source code that we can decompile, either because it's hidden away on Facebook's servers or because it's the output of a long, machine-generated process and even Facebook can't directly "read" it. The only way to discover these problems is indirectly, the way some news organizations are trying to collect and analyze Facebook's political ads via user reporting tools.

As more and more of the world becomes data-driven, it's easy to see how emergent behavior could reinforce existing biases if not carefully checked and guarded. When the behavior in question is Pokemon Go redlining, maybe it's not such a big deal, but when it becomes housing — or job opportunities, or access/pricing for services — the effects will be far more serious. Class action lawsuits are a reasonable start, but I think it may be time to consider stronger regulation on what personal data companies can maintain and utilize (granted, I don't have any good answers as to what that regulation would look like). After all, it's pretty clear that tech won't regulate itself.

Addendum: on journalism and public policy

I'm posting this the night before Election Day in the USA. Like a lot of newsrooms, The Seattle Times has a strict non-partisan policy for its journalists, so I can tell you to vote (please do! it's important!), but I can't tell you how I think you should vote or how I voted, and I'm discouraged from taking part in public activities that would signal bias, such as caucusing for either party. It's a condition of my employment at the paper.

Here's what I think is funny: in the post above, I lay out a strong but (I think) reasoned argument for public policy around the use of personal data. While I'm not particularly well-sourced compared to the journalists at ProPublica, I am one of the few people at the Times who can speak knowledgeably about how databases are stored and interact. I can make this argument to you, and feel like it carries some authority behind it.

But I can only make that argument because our political parties do not (yet) have any coherent preference on how personal data should be treated. If, at some point, the use of personal data were to become politicized by one side or another, I'd no longer be free to share this argument with you. The merits of my position wouldn't have changed, nor would my expertise, but I'd no longer be considered "objective" or "unbiased."

The idea that reporters do not have opinions, or can only have opinions as long as the subjects don't matter, seems self-evidently silly to me. Data privacy is a useful thought experiment: in this case, I'm safe as long as the government never gets its act together. If that seems like a terrible way to run the fourth estate — indeed, if this sounds like a recipe for the proliferation of fact-free publishing outlets encouraged by the malicious inattention of Facebook — I can't disagree. But to tie this to my original point, however this election turns out, I think a lot of journalists are going to need to ask themselves some hard questions about the value of "objectivity" in an algorithm-powered media universe.