February 5, 2016

Catch-up: 2015

The last thing I'd written about here was the paper's investigation into police shootings, so let's take this chance to wander through the rest of 2015.

In October, after a Seattle dentist shot Cecil the lion and made himself temporarily infamous, one of our reporters put in a records request for all historical animal imports into the USA. The resulting story involved querying through seven and a half million rows of data to find out what we import, and how Paul Allen's Initiative 1401 (which banned the resale of several species of animal trophies) would affect these imports (answer: hardly at all). We also got to do some fun visualizations for it.

In November, my teammate Audrey worked with the Seattle Sketcher to create a voiced history of Ravensdale, a boomtown destroyed after a mining accident. In general, audio slideshows aren't hugely successful online, but I think this one was a really pleasant experience, and analytics indicate that a lot of people listened to it.

Every year, during the Seahawks season, the paper does a series of "paper hawks": foldable paper dolls for players on the team. The last one is blank, so people can put in their own faces. To make things interesting, I put together a paper hawk web app that could use a camera to take a picture of the reader, and do all the customization in the page (including changing skin tones and hair color), then print it out. This was an interesting project in part because the API I used (getUserMedia) is restricted to HTTPS only in Chrome. To make it work, we moved all of our projects to secure domains, which was a great test case for encrypting additional content at the paper.

For MLK Day, my team revived the Seattle Times' tribute to the great man, which was originally published twenty years ago (and had been last updated in 2011). The new version is responsive and easier to update, so that each year we can add more information to it. It's fitting, of course, that the paper has a page just for Dr. King, since they were a major part of the campaign to rename King County in his honor back in 1995. It's pretty cool to keep that tradition going.

Finally, just this week, we published a Pacific NW Magazine story on modern dating, with an interactive "mini-documentary" that I built with our video team. Based on your answers, it generates a custom playlist from the interviews that we recorded. We were inspired by this great piece done by the Washington Post on "the N word." I really enjoyed putting the interactions and animation together, but honestly, most of the credit goes to our video team, and my work was just the window dressing.

These are just the major interactives, of course. All told, we built 84 projects of all sizes last year, not including various small pages built by the producers using our app template. That's a pretty good rate of production for a two-developer team. Here's to a busy 2016!

January 21, 2016

Unconferencing

How do we level up data journalists? In a few months, we'll have a new digital/data intern at the Times, and so I've been asking myself this question quite a bit, especially in light of our team's efforts to recruit diverse candidates. There are a lot of students and young journalists out there with a little bit of training, but no idea where to go from there: how do we get them across the gap to where they're capable of working on a newsroom development team? There's a catch-22 at work here: it's especially tough for aspiring news devs to get a job without experience, but they can't get experience without the job.

One strategy I've often heard is that young people should attend industry conferences as a way to learn from experienced journalists and build connections. Myself, I'm skeptical of this. Conferences have never really been a part of my professional life. We didn't go to them at CQ, and I never got a chance to go to GDC when I worked in the game industry. After I was hired at the paper, I got to go to SND2015 and Write the Docs, and this year I'm heading to NICAR, SRCCON, and (possibly) CascadiaJS. It's possible I really hate myself.

Visiting conferences is rewarding, but it's also exhausting, expensive, and a huge time-sink. And while host organizations often work to mitigate that through scholarships and grants to disadvantaged communities, it's still a big ask for neophytes. Even if I weren't skeptical of the benefits conferences actually bring, I think it's hard to argue that we don't need better, more accessible solutions.

The way I see it, there are three things that you get out of a conference as a young person:

- Mentorship

- Training

- Exposure to developing industry trends

Of the three, the first is the hardest to duplicate, and yet it's the most crucial. Networks are powerful in this industry, and you can practically watch them develop before your eyes if you look closely: young people who catch a break early with the right people, and find themselves quickly elevated with opportunities to work on well-known teams, fill industry panels, and write insipid Nieman Lab think-pieces on the future of news. Then we all end up competing over hiring those same six people, which I don't really think is healthy.

Ironically, this is something I want to discuss with other newsrooms at the conferences this year, before I retreat into my Seattle cave for the rest of my natural life. But I'm also starting a personal initiative to make myself available for "remote mentorship," and asking other people to do so. If you're in news and would like to join, feel free to add yourself to the sheet, and I'll share it with students or other people who get in touch!

December 28, 2015

Let's not

Right now you can access my portfolio over a secure, encrypted connection, thanks to Let's Encrypt. Which is pretty cool! On the other hand, if nginx restarts this week, it'll probably crash on a bad config value, temporarily disabling all my public-facing websites. This has been emblematic of my HTTPS experience in general: a mix of triumphs and severe configuration mishaps.

A little background: in order to serve a website over a secure connection, you need a digital certificate to encrypt communication with the browser. You can generate these certificates yourself, but that's really only good for personal use. The self-signed cert has to be manually installed on each machine that accesses the server, otherwise the browser will throw up a big, ugly warning screen. The alternative is to buy a certificate from a "trusted authority," most of which are not particularly trustworthy or authoritative, but it'll get you a green lock icon in the URL bar. Purchased certs tend to be either expensive or a hassle or both.

After the Snowden leaks, there was a lot of interest in encrypting all web traffic, which meant bypassing the existing certificate authority protection racket run by Symantec et al. Mozilla and some other organizations got together and started Let's Encrypt, with the goal of making trusted certificates free and easy. I figure they're halfway there: I didn't pay anyone for the cert, at least.

There's an official client for the service, but it only works for Apache and it's kind of hefty. My server is set up in an unsupported (but still pretty standard) configuration: I run nginx as a reverse proxy in front of Apache (for PHP scripts) and Node (for various apps, including Weir), both of which I'd like to be secured. So I used acme-tiny instead, which basically just talks to the cert API and is small enough that I could read and understand the whole thing. I wrote a shell script to wrap it up and automate things. Automation is important, because unlike paid certificates, these are only good for 90 days, so you need a cron job set to run every month or so to renew them.

Setting all this up wasn't an easy process. The acme-tiny script is well-written, but it has bugs on the version of Python that comes with CentOS. Then I had to set up nginx to use the certificates manually. My webmail got locked into an infinite redirect once I moved my self-signed cert out of Apache and onto the proxy. And the restart crash? Turns out that Let's Encrypt is rate-limited on a per-domain basis, and I didn't back up the current certificate before I hit the rate limit, so my update script overwrote it with an empty version. Luckily, nginx caches certs and won't restart if it detects a bad config, so I'm safe as long as it can outlast the seven-day rate-limit window (it probably will: it's been up 333 days so far, after all).
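The overwrite described above is the kind of failure a small guard can catch. What follows is not my actual renewal script (that one is a shell wrapper around acme-tiny), just a minimal Node sketch of the idea. The function name and file paths are hypothetical: refuse to install a certificate file that is empty or malformed, and keep a backup of the one currently in use.

```javascript
const fs = require("fs");

// Hypothetical helper: install a freshly issued certificate only if it looks
// valid, keeping a backup of the one currently in use.
function installCert(newPath, livePath) {
  const pem = fs.existsSync(newPath) ? fs.readFileSync(newPath, "utf8") : "";
  // A rate-limited or failed request can leave an empty file behind.
  if (!pem.includes("-----BEGIN CERTIFICATE-----")) {
    return false; // leave the live certificate untouched
  }
  if (fs.existsSync(livePath)) {
    fs.copyFileSync(livePath, livePath + ".bak");
  }
  fs.writeFileSync(livePath, pem);
  return true;
}
```

A cron job could call this after each renewal attempt and only reload nginx when it returns true.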

Without literally years of server admin experience, I'm not sure I would have made it through these issues. And as I mentioned, my system is pretty standard — there's no load-balancer, no CDN, and I don't need to host third-party content. I also don't have any business that gets lost if anything is busted and the certificate expires in March. If I were, say, an IT department responsible for a high-traffic site, I'd be a lot more cautious about moving everything over to HTTPS, either through Let's Encrypt or a paid option.

Ultimately, the news industry and other sites are going to have to follow the lead of the Washington Post, even if the timeframe takes a while. Even apart from the security benefits it carries, browsers have locked new features (Service Worker, for example) behind HTTPS, and are moving old features behind it as well (geolocation is going to be the biggest disruption there). If you want to develop fast websites in the future (assuming that's something news product management cares about, which is... questionable), and especially if you want to create rich news applications, you're going to have to be encrypted.

In my case, I wanted to get a head start on developing with new browser features (a Service Worker would clean up a lot of Weir code), so it's worth the hassle. And we will continue to push these boundaries on the Seattle Times interactives team, since we've moved our S3 hosting to HTTPS (the rest of the site will follow eventually).

But I think there's a lot of tension between where we want to be, as a news industry, and where it's possible for us to be right now. Although I've seen people calling for incentives to change it (such as requiring HTTPS for news grants), the truth is that it just isn't that simple. News sites are often built in a baroque, overcomplicated set of layers — the Seattle Times, for example, currently sits behind a CDN, several instances of Varnish, some reverse proxies, and a load balancer, mostly due to a lot of historical baggage. Changing this to run securely is going to be a big process, even for a company of our size (maybe because of our size). I can't imagine the hassle for local papers that might have little or no IT support. It won't happen overnight, and Let's Encrypt hasn't done anything to change that yet.

In the meantime, I think it's worth stepping back and asking what we really want out of a digital news industry, because sometimes it's hard to maintain perspective from in the trenches. Is it important that readers be able to see our sites securely, free from worries that third parties are snooping or altering what they see? Sure, that's important. Is it in the top three things that Americans need from local news, above problems like "a sustainable revenue model" and "a CMS that doesn't actively fight against the newsroom?" Probably not. Given a choice between a cryptographically-secure media and a diverse, sustainably-funded media, I'm personally going to take the latter every time.

December 22, 2015

Post-SCC Plans

Last Tuesday was my last day at Seattle Central College, and I turned in my grades over the weekend. If nothing else, this leaves me with 10-20 free hours a week. And while I'll no doubt spend much of that time watching movies, practicing my dance moves, or catching up on my Steam backlog, I do have some projects that I want to finally start (or restart) in my spare time.

- Rebuild Grue: back when I was leaving Big Fish Games, I spent a couple of weeks working on a text adventure framework called Grue. The main goal of it was to make constructing interactive fiction in JavaScript as easy as possible. I think it was reasonably successful: a sample world is surprisingly readable and intuitive. It also got a bunch of things wrong (weird inheritance system, poor module setup). I'm planning on rebooting Grue in 2016, using ES2015 and a Node-compatible interface.

- Upgrade Caret's find/replace functionality: A few months ago, in one of my rare open source success stories, a contributor added project-wide search to Caret — a much-requested feature for years now. Unfortunately, we're still stuck with the default Ace dialog for find/replace within a single file. This year, I want to pull that out and re-implement it as a Caret UI widget, which (among other things) will fix a number of regular-expression bugs.

- Play music: Bass took a backseat to breaking when I started dancing a few years ago. These days, my fingers are noticeably slower on the strings than they used to be, which seems like a shame since I bought a really nice bass before we moved to Seattle. If I can find a laid-back open mike, I might resuscitate Four String Riot for a session or two.

- Break the web: In a recent project at the paper, I started using the getUserMedia API to access the built-in camera from a web app. It turns out this is, apart from some weirdness and the need to polyfill, pretty great: you don't need native code to access the camera (of course it's not in Safari). Now I want to do some additional mini-apps that use other future-forward web APIs, like Service Worker and gamepads.

- Write another book/article series: I've gotten a lot of really good feedback on JavaScript for the Web Savvy, both from inside my classes and by random readers around the Internet. Now that I have some time, I'd like to write another book, probably this time packaging up some of the lessons I've learned working in data journalism. I also want to pitch an article series that helps get people from the basics of web production up to more serious news app development.

- Hacks/Hackers meetup: Finally, last year I took over the local Hacks/Hackers meetup group, but I've been too busy to organize anything for it. Now that I have the time to round people up and gather resources, I want to make good on my goal of holding a day-long event one weekend — either as a hack day or a training session of some kind. More details as I figure it out!

December 7, 2015

How We're Fast

Over the Thanksgiving holiday, when I wasn't busily digesting as much cornbread stuffing as I could eat, I spent some time running WebPageTest against various projects that the Seattle Times Interactive team has built. The news industry as a whole may not care about speed, but I do, and I want our pages as fast as possible — especially the ones that are embedded in the regular CMS via responsive frames.

After all the testing, I'm generally pleased by how our stuff stacks up, especially when compared against the rest of the site. We have some advantages, of course: our pages typically have fewer ads, and we can strip down the page for maximum efficiency. But it's also the result of a lot of hard work on our news app template, ensuring that every project comes with smart decisions built in. I genuinely think that all news pages could be this fast, so it's worth talking about how we've made it happen, especially for other news organizations that use a similar flat-file approach to their interactives.

Browserify with care

We use Browserify to package up our JavaScript, because we're not savages, and you need some sort of module system for JavaScript these days. Browserify builds all our scripts into a single file, which is important for high-latency connections (which means most cellular networks, even on 4G). We also make sure to load that bundle file with the async attribute at the bottom of the page, so that it won't block rendering.

All of that is pretty standard best practice, but we've also learned that Browserify can be dangerous if you're not careful. A lot of NPM modules are published with the unminified, debug version of the library as the default export from the module. Angular in particular is bad about this: running require("angular") on its own will load a file filled with comments and documentation, totalling more than a megabyte in size (even after gzip, it's still more than 200KB). That's huge!

As a result, one of our production checklist items is to make sure that we are loading the minified version of any external libraries. We also use the browser property in our package.json file to alias common libraries to their minified versions, so that when we require Angular, jQuery, or Leaflet, it automatically defaults to the smallest file.
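As a rough illustration of that checklist item, here is a sketch of what the browser field can look like and how a build step might verify it. The object below stands in for package.json. The file paths are assumptions about where each library ships its minified build, and unminifiedAliases is a hypothetical helper, not part of Browserify.

```javascript
// Example package.json contents; the paths are assumptions about where each
// library ships its minified build, so check them against node_modules.
const pkg = {
  browser: {
    angular: "./node_modules/angular/angular.min.js",
    jquery: "./node_modules/jquery/dist/jquery.min.js"
  }
};

// Hypothetical checklist helper: list aliases that do not point at a
// minified file.
function unminifiedAliases(pkgJson) {
  const aliases = pkgJson.browser || {};
  return Object.keys(aliases).filter(name => !/\.min\.js$/.test(aliases[name]));
}

console.log(unminifiedAliases(pkg)); // prints []
```

The filename test is only a heuristic: some libraries publish a minified build without the .min.js suffix, so a real check would need an exceptions list.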

Gzip on S3

Like a lot of newsroom developers, my team hosts files on Amazon S3, mostly because it's cheap and reliable. People like to think about S3 as though it's just a normal, hierarchical flat-file server, like Apache or Nginx, but it's not. S3 is really a key-value store: you put in a path, and it spits back a prerecorded reply, including the headers.

If you think of S3 as a server, you'll expect it to do a bunch of things that it doesn't actually do. For example, it doesn't set a cache expiration date, and it doesn't know about content types. It also doesn't understand about Gzip compression, so it'll merrily serve your files in their uncompressed form, making them way bigger than they need to be, even if the browser requests the compressed version.

We get around this by running a compression stage on any text-based file during deployment, and setting the headers for the stored object to match. This does mean that theoretically, a browser that doesn't support Gzip will be unable to request that content, because S3 will always respond with compressed content no matter what Accept-Encoding header the browser sends. Luckily, every browser since IE4 supports it.
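A minimal sketch of that compression stage, assuming Node's built-in zlib module: it is not our actual deploy task, and the bucket name, cache lifetime, and gzippedUpload function are placeholders. The field names follow the parameters that the AWS SDK's putObject call accepts, but the upload itself is left out.

```javascript
const zlib = require("zlib");

// Hypothetical deploy step: compress a text file and describe the S3 object
// that should be stored for it. The bucket name and cache lifetime are
// placeholders.
function gzippedUpload(key, contents, contentType) {
  return {
    Bucket: "example-bucket",
    Key: key,
    Body: zlib.gzipSync(Buffer.from(contents, "utf8")),
    ContentType: contentType,
    ContentEncoding: "gzip", // stored header, sent on every response
    CacheControl: "public, max-age=300"
  };
}

const params = gzippedUpload("index.html", "<h1>Hello</h1>", "text/html");
console.log(params.ContentEncoding, params.Body.length);
```

Because the headers are stored with the object, they need to be set at upload time for every text-based file. Already-compressed formats like JPEG gain nothing from a second pass.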

Reduce framework code

I love Angular. If you want to quickly generate a visualization with powerful tools for filtering and data binding, you can't do much better. I personally think it's an order of magnitude better than D3. But Angular can also be brutally slow: its change detection algorithm requires a lot of time and memory as a tradeoff for developer convenience.

On a recent project that looked at animal imports, we started with Angular as a way to test out the visualization, but soon noticed that it was taking three or four seconds just to parse and apply the data. On a desktop, that time is a drag. On mobile, it's likely to get the tab terminated, or convince readers that there's something wrong with it.

When the profiler says that you're spending that much time in JavaScript, there are two options. The first is to try to find ways to work around the framework, which can range from unpleasant to actively painful. The second is to just rewrite in vanilla JS. It sounds more difficult to do the rewrite, but if all you're doing is data-binding and events, you can usually replace it pretty easily with a little templating and some custom data attributes. The resulting code isn't as clean or simple, but in the case of the animal imports, it dropped our JS execution time to under 100ms. That's fast.

Even jQuery can be optional these days. Because we compile ES6 down with Babel, a lot of DOM code that would be ungainly can become elegant. Template strings and arrow functions alone have allowed us to cut out DOM libraries entirely, and as a result many of our interactives consist of no external libraries at all. If you haven't checked into the advantages of using Babel in your build process, it's well worth another look.
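To give a sense of what that replacement looks like, here is a small sketch of templating with template strings and arrow functions, using a data attribute and a single delegated click listener in place of framework bindings. The data shape, class name, and function names are invented for illustration and are not taken from the animal imports project.

```javascript
// Escape text before interpolating it into markup.
const escapeHTML = s =>
  String(s).replace(/[&<>"]/g, c => ({ "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;" }[c]));

// Hypothetical data shape: one row per imported species.
const renderRows = rows => rows.map(row => `
  <li data-species="${escapeHTML(row.species)}">
    ${escapeHTML(row.species)}: ${row.count}
  </li>`).join("");

const html = renderRows([{ species: "Lion", count: 12 }]);

// In the browser, one delegated listener replaces per-row bindings.
if (typeof document !== "undefined") {
  const list = document.querySelector(".imports"); // assumed container
  if (list) {
    list.innerHTML = html;
    list.addEventListener("click", e => {
      const item = e.target.closest("[data-species]");
      if (item) console.log(item.dataset.species);
    });
  }
}
```

The tradeoff is that re-rendering is manual: when the data changes, you call renderRows again and replace the markup yourself.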

Reduce third-party code

The number one contributor to page load time is not written by journalists: it's the third-party ad code that runs on the page. There may be only so much you can do about this, since it pays the bills, and of course it may not even apply on embedded graphics. But on our standalone pages, I've taken a strong stance on implementing all code ourselves whenever possible. For example, although our commenting system usually requires multiple scripts loaded synchronously, I wrote a loader that runs through and adds them asynchronously, and only after a user clicks on the "view comments" banner. We can't avoid the hit, but we can delay it until well after the rest of the page has had a chance to render.
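The deferred loader idea can be sketched in a few lines. This is not the loader from our template, just a minimal version under some assumptions: the script URLs and the .view-comments class are placeholders, and the scripts are chained on their load events so that they still run in order.

```javascript
// Hypothetical loader: add scripts one at a time so they still execute in
// order, but never block the initial render.
function loadInOrder(urls, doc, done) {
  if (!urls.length) return done && done();
  const script = doc.createElement("script");
  script.src = urls[0];
  script.onload = () => loadInOrder(urls.slice(1), doc, done);
  doc.head.appendChild(script);
}

// In the browser, wait for the reader to ask for comments first.
if (typeof document !== "undefined") {
  const banner = document.querySelector(".view-comments"); // assumed class
  if (banner) {
    banner.addEventListener("click", () => {
      loadInOrder(["/comments/a.js", "/comments/b.js"], document); // placeholder URLs
    }, { once: true });
  }
}
```

Passing the document in as an argument keeps the function easy to exercise outside a browser.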

Lazy-load everything

Once you've delayed scripts with the async attribute, trimmed the size of those scripts and compressed them, and deferred as much third-party code as you can, what's left over? In our case, this is where we start getting into the structure of the actual interactive, and how it loads itself. For most interactives, we embed data directly into the page, but beyond a certain size it becomes worthwhile to grab it via AJAX instead.

But there's another way to think about lazy-loading, and that's to consider what format you're actually using to populate the page. I'm as big a fan of progressive enhancement as anyone else, but in the case of my team, what we produce is interactive — there's literally no point if JavaScript is disabled. I've found that moving content into JSON and then templating it onto the page can reduce download times significantly, while the speed hit is negligible. Finding the balance between network speed and JavaScript execution time is a constant process for us.

When performance matters

Finally, a note of caution: as much fun as it is to squeeze every last millisecond out of the browser, I'm a little uncomfortable making it the alpha and omega of the job. Ultimately, our goal is to inform people — we'd like that to be fast, but a fast page with bad or misleading reporting is still a failure.

What I like about front-end speed is that it serves as a useful proxy for site quality. A site that's fast can't load too many ads. It can't serve too many tracking scripts. It has to put the reader first. It's easy, much of the time, to chip away at performance in the name of business metrics: loading an additional analytics script to get more information, or an obnoxious ad for a short-term revenue boost.

But if you put speed first, every decision has to start from the perspective of "what's good for the reader?" It's hard to measure the impact of good journalism, but we can have metrics for speed and other technical aspects of the presentation. We can spend more time on the former if we have strong, user-centric guidelines on the latter. If we want people to give us money over the long term, that seems like the only healthy strategy to me.

November 20, 2015

Weir, year two

I realized the other day that Google Reader shut down in June of 2013, which means I've been using Weir as my RSS reader for more than two years now. It's my longest-running software project, and still one of the most complex things I've built in Node. And apart from occasional revisions, it's been up and running constantly, in mobile and desktop browsers, that entire time.

I don't log out a lot of metrics from Weir, so there's a lot of stuff that I'm not tracking. But I can say that there are currently 113 subscriptions, with around 6,000 stories in the database. The server that hosts the app (as well as my various domains) downloads about 20GB of data each month, most of which is probably Weir (the rest is e-mail and server updates, and I'm frankly not that popular). It also hovers around 10% of available memory, which is pretty good for a garbage-collected language on a piddly little VM.

On the client side of things, the Angular code has definitely started to show its age. This was the project that I used to learn Angular, and since then I've learned a lot about the framework. Would I use it if I were writing Weir from scratch? I'm not sure. I still love the databinding aspect of Angular, but I suspect I could write a smaller, nimbler version of the UI in vanilla JavaScript pretty easily. At some point, I may give it a shot: the server API is clean enough that writing a new client should be relatively straightforward.

As an experiment in self-hosting a cloud service, Weir is a mixed success. But I have grown to love the way that something I wrote has become a fixture of my life. I clear out my stream on the bus in the morning. Throughout the day, Weir's purple tab icon lights up to let me know that new items are available. It feels like wearing clothes that I tailored for myself — using it feels a little nicer than it should, just from the pride in its construction.

November 4, 2015

Closing the textbook

Last week, when the administration sent out their quarterly "please someone cover these classes, we are very desperate" email, I put in my notice at Seattle Central College (how's that for irony?). I'll be finishing up this quarter teaching ITC 210, and then I'll need to find a new way to occupy 10-20 hours a week. For a start, I'm planning to volunteer for the local Girl Develop It organization as a TA. I'll be able to cook for Belle more often. And I'd like to be more active in managing the local Hacks/Hackers chapter that I took over earlier this year.

SCC does have some deep organizational problems, and I won't pretend they haven't influenced my decision to leave. But I don't regret the time I spent there: there's been little as rewarding as seeing people take the information I can give them and really run with it. Teaching has often pushed me to make sure that I knew every detail of a subject so that I wouldn't mislead students, and it's gotten me to explore new workflows and clarify my thinking on a lot of topics.

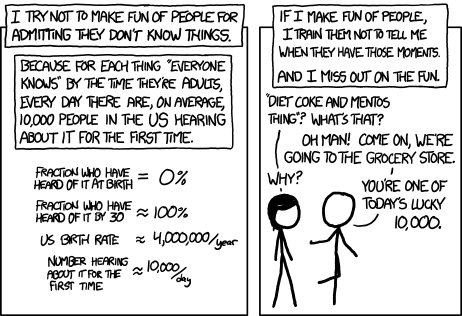

The most important thing I've learned isn't anything technical. Early on in my time as an instructor, I would often be surprised when students wouldn't know something basic, even though it might have been in the prerequisites (only later would I find out how porous those prereqs are at SCC). After a little while, I made a conscious decision that my reaction should be enthusiasm instead of surprise. Although I'm not a huge fan of XKCD, I was inspired by this comic:

Approaching ignorance not as a character flaw or personal failing, but as a chance to share something cool, was great for students. It provided a perspective from which basic questions can turn into an enthusiastic deep-dive into a topic — something even advanced students might find valuable. And it kept me engaged far longer than I think I could have managed in a curriculum where the opportunities to teach really high-level, interesting techniques just weren't there.

Although it seems a bit like pablum, and deeply out of character for a cynic like me, I actually believe that enthusiasm will continue to be useful, even when I'm not teaching regularly. After all, I work on the bleeding edge of an industry that's still struggling to figure out the Internet: the least I can do is be positive about it, for my sake if not for theirs.

October 14, 2015

AMPed up

This morning, you can read my opinions (plus those of three other newsroom developers) on AMP, Google's proposed ultra-fast publishing format. I'm the most optimistic of the four, even though I wouldn't say that I'm enthusiastic. I think it's an interesting format, and possibly a kick in the pants for the business side of the industry.

In the last question of the interview, I talk a little bit about how I don't think site performance is a topic of actual discussion for product managers at news organizations, and as a result speed is still not a priority for them. What I didn't get in, but wish I had, is that I'm not sure they're wrong about that. Certainly, performance is important and third party code has run rampant on mobile pages. But is that really what's killing us?

I think it's worth remembering that this whole conversation started, in part, because Facebook decided that they want to be a publisher. Of course, nobody with a firm grasp on reality would think that handing full control of all their content over to Facebook is a good idea, so Zuckerberg's posse needed to create an incentive. Instant Articles ensued: in a burst of publicity, Facebook announced that the web was "slow" (with a lot of highly suspect numbers quantifying that slowness) and proposed their publication system as a way to speed it up.

Since in general we like nothing more than talking about how awful our industry is, journalists leapt to join in: why yes, now that you mention it, look how slow our sites are! Clearly, that's the problem (and not, say, the fact that Facebook holds our referral traffic hostage). It's the same reaction the industry has every time Apple releases a new device — cue exhaustive (and exhausting) ruminations on how to create compelling smartwatch content. Yuck.

This is not the first time that Facebook has created panic around the open web in order to make its social racket seem more appealing. In 2011, Anil Dash wrote his infamous post Facebook is gaslighting the web, documenting their practice of putting scary warnings on outgoing links while privileging their (short-lived) "seamless sharing" program. I think we should be careful about accepting their premises, even when they seem to jibe with the larger conversations around web technology.

Which brings us back to the question: should we care that news sites are slow?

My thought is that from a technical side, we should obviously care. Everyone on the web cares about speed. It has a proven effect on things like purchases and on-site time. It's an important metric, and one we should absolutely take seriously. But from a product standpoint, is it the most important thing? No. It's a Product X, and Product X will not save journalism (that post is from 2010, and sure enough, I think I've linked to it once a year since). It's easier to pitch a silver bullet than to admit the harder truth: that the key to our success is putting out journalism that is good enough that people will pay for it, one way or another.

It's possible, unfortunately, that there is no general-audience journalism good enough to make people pay for it anymore. And in that case, we are all doomed, with the possible exception of the NYT and whatever hipster media startups can get Comcast to cough up $200 million in funding. So it goes. But if we're going to be doomed, I'd rather be honest about why that is. It's not because we're slow. It's not because the ads are horrible. It's because our readers didn't think what we put out was important enough to pay for. That's enough of a tragedy on its own.

October 1, 2015

Shielded by the law

This weekend, The Seattle Times released our investigation into Washington's "evil intent" law, which makes it almost impossible to prosecute police officers for the use of deadly force: Shielded by law. This was a great project to work on, and definitely an issue I'm proud we could bring to a wider audience. The source code for it is available here.

This project is on a page outside of our CMS entirely to support the outstanding trailer video that our photo department put together, which plays at the end of the animation if your device supports inline video (sorry, iOS). We didn't want to jump directly into the video, because A) it has sound, and B) many readers might find its visuals disturbing. Using our common dot motif to pull people in first, and then give them a choice of watching the video, seemed like a nice strategy. The animation is all done in the DOM and is mostly done just by adding classes on a timer — the only real time that JS touches individual elements is to randomize the fade-in for the dots. Loading the page without JavaScript shows the final image of the animation, via a handy no-js class that the scripts remove before starting playback.
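The class-on-a-timer approach is simple enough to sketch. The published source has the real version. This one is only an illustration, and the stage names, delays, and choice of the body element are all assumptions.

```javascript
// Hypothetical timeline: each stage adds one class after a delay (ms).
const stages = [
  { at: 0, className: "show-dots" },
  { at: 2000, className: "show-title" },
  { at: 4000, className: "show-video-prompt" }
];

function play(el, timeline, schedule = setTimeout) {
  // Remove the fallback class so CSS no longer shows the final frame.
  el.classList.remove("no-js");
  timeline.forEach(stage => {
    schedule(() => el.classList.add(stage.className), stage.at);
  });
}

if (typeof document !== "undefined") {
  play(document.body, stages);
}
```

All of the actual motion lives in CSS transitions keyed to those classes, which is why the script can stay this small.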

One of our interesting experiments in this story was the use of embedded quiz questions, asking people to test their preconceived notions of police shootings. Originally we intended to scatter these throughout the story to grab readers' attention, but a section on the numerical results of the investigation ended up spoiling the answers. Instead, we moved them to a solid block before that section, and it's been well-received. The interactive graphics were actually also a relatively last-minute addition: originally, we were just going to re-run the print graphics, but exposing all the data in a responsive way was just too useful to pass up.

Probably the most technically advanced part of the page is the audio transcript from the 1985 state senate hearing on the law. As the audio plays, the transcript auto-advances and highlights the current line. It also displays a photo of the speaker from the hearing, to help readers get an idea of the players involved. Clicking on the transcript scrubs the audio to the correct spot. We don't do a lot of audio work here, unfortunately, but I think having an interface that's friendly to readers and listeners alike is a really nice touch, and something I do want to take advantage of on future projects. We built it to generate the data from standard subtitle files, so it should be easy to revisit.
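As a rough illustration of the subtitle-driven approach, here is a short TypeScript sketch that turns a subtitle file into cue data and finds the line to highlight at a given playback time. It assumes SRT input, which may not be the format the project actually used, and the function names (toSeconds, parseSrt, activeCueIndex) are hypothetical. The speaker photos are left out.

```typescript
// Hypothetical sketch: assumes SRT input; the project may have used another format.

interface Cue {
  start: number; // seconds
  end: number; // seconds
  text: string;
}

// Convert an SRT timestamp such as "00:01:02,500" to seconds.
function toSeconds(stamp: string): number {
  const match = /(\d+):(\d+):(\d+)[,.](\d+)/.exec(stamp);
  if (!match) throw new Error(`Bad timestamp: ${stamp}`);
  const [, h, m, s, ms] = match;
  return Number(h) * 3600 + Number(m) * 60 + Number(s) + Number(ms) / 1000;
}

// Parse SRT text into cues. Blocks are separated by blank lines and hold
// an index line, a timing line, and one or more lines of text.
function parseSrt(source: string): Cue[] {
  const cues: Cue[] = [];
  const blocks = source.replace(/\r/g, "").trim().split(/\n\s*\n/);
  for (const block of blocks) {
    const lines = block.split("\n");
    const timing = lines.find((line) => line.includes("-->"));
    if (timing === undefined) continue; // skip malformed blocks
    const [start = "", end = ""] = timing.split("-->");
    const text = lines.slice(lines.indexOf(timing) + 1).join(" ").trim();
    cues.push({ start: toSeconds(start), end: toSeconds(end), text });
  }
  return cues;
}

// Index of the cue to highlight at `time` (in seconds), or -1 between cues.
function activeCueIndex(cues: Cue[], time: number): number {
  return cues.findIndex((cue) => time >= cue.start && time < cue.end);
}
```

In the browser, the audio element's timeupdate event can pass its currentTime to activeCueIndex to move the highlight, and a click on a transcript line can scrub by setting currentTime to that cue's start.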

Lastly, one of the most important parts of the story is the least flashy: the table in the "by the numbers" section for deadly force rates by race/ethnicity. We had worked for a while with this information presented the same way as the other trivia questions, via clickable dots, but found that the part we really wanted to stress (the relative rates of death proportional to the general population) didn't stand out as much as we wanted. We brainstormed through a few alternate visualizations, including stacked bars and nested pie charts, but in the end it was just clearest to build a table.

Like Rodney Dangerfield, tables may get no respect, but a well-designed one can often be the simplest, easiest way to get a point across. The question then is, what's a well-designed table? Personally, I think there's a whole post in that question: how you order the columns, effective sorting/filtering, and how to add extra features (embedded sparklines, detail expansion, and tree views) that add information without confusing readers. One day, maybe I'll write it. But in the meantime, if you're working on a similar project and can't quite figure out how to present your information, there's no shame in using a table if it serves the story.

September 15, 2015

Value Ad

The math is even starker for smaller publications and individual bloggers, who rely more heavily on display advertising—and who have already been battered by shifts in the advertising market; some longtime professional bloggers, like Heather Armstrong, have given up writing their blogs full-time. The Awl's publisher Michael Macher told me that "the percentage of the network’s revenue that is blockable by adblocking technology hovers around seventy-five to eighty-five percent." Currently, readers use an ad blocker on around twenty-five percent of all pageviews. Nicole Cliffe, one of the founders of The Toast, said that "adblocker is brutal for us. And people always break out the 'Subscribers model! I donate twenty bucks a year!' thing but it doesn’t add up."

I'm finding myself thinking about adblocking a lot this week, and about publishing platforms. I spend a lot of time thinking about this in general, because I enjoy working for a Seattle newspaper and I would like it to still be here (in one form or another) fifteen years from now (at least), something which was never guaranteed but looks noticeably more tenuous these days. And the upcoming launch of easy, widespread mobile ad-block software is a big part of that.

Bad apples

You can't say that the ad industry has not done anything to deserve this, because of course they have. Online advertising has always been the place where incompetent programming and delusional management meet in a nexus of terrible. You're not a bad person if you work in ads, but you work for a bad business and in all seriousness I will help you go work somewhere else if you get in touch with me. Contact info is on the right.

The problems that advertising causes for web pages are well documented. Ads slow pages down. They're heavy and disruptive. They cause security risks and drive-by hacks. There is a strong argument that a lot of the (admittedly welcome) improvements in web programming technique come from having to work around these issues: lazy-loading content, async scripts, module systems that can't be stomped by leaky ad code globals.

As a side note, in these discussions, one of the big elephants in the room is that Google (and Facebook, and Apple, and Twitter) are all ad companies. Which is true, but it's true in the way that we might say that insects are a good source of protein — you're still not going to sell me a grasshopper sandwich. Lumping Google in with the average fly-by-night agency may be technically correct, but anyone who has interacted with regular ad code will tell you that the two are miles apart. If Google were actually the people writing the ads you see on an average media site, we probably wouldn't be having this discussion.

Well, we might. Apple might still have decided to stick their thumb in Google's eye out of pure spite, because they're a nasty little gang of capitalists, and that's kind of what they do. But it doesn't matter, because the really smart people at Google aren't writing actual ads. They write very elegant, high-performing auction software that distributes other people's horrible, horrible code, thus undermining quite a bit of their moral high ground. It's a little hard to get mad at readers who want to run content blockers or Greasemonkey scripts or whatever. Of course you want to block these ads! Who wouldn't?

Disruption and its discontents

We have a bad habit in the news industry, which is that we have no faith in our ability to run a business, even though we speculate on it endlessly. Allison Hantschel has been writing posts like this for literally a decade now as a result. One word for the embrace of clear management-led self-sabotage is "trusting." Another word is "suckers."

Newsrooms are very good at grilling other organizations about their plans, and very bad at interrogating our own, in part because we're supposed to have a "wall" between the business and editorial sides of the enterprise. These days that wall is often porous, but the tradition is still there. So when the business half of a paper tells editors and reporters that running obnoxious ads is necessary, we don't often push back, even though we don't want to run them any more than readers want to see them.

This is an explanation, not an excuse. That said, it is inescapably true that the business models we chose, as an industry, are not proving to be as solid as they once were — and it is worth remembering that journalism really was (and in many cases, still is) wildly profitable. Craigslist killed off the classifieds, and content blockers will probably suck all the profit out of the banner-ad revenue stream. Ironically, the one strategy that's still surprisingly sound is printing the previous day's events into a complicated stack of folded paper and selling it for a buck or two. It's not a growth industry, but it seems to be relatively disruption-proof so far. Nobody seems very clear on how to take that model online, though, except by digitizing old people a la Kurzweil and counting on them to pay for content (probably a long shot).

The thing about Silicon Valley's lust for disruption is that, absent any principles other than a libertarian belief in market power, it tends to just recentralize or recreate the pre-disruption problems. So instead of having a corrupt taxi bureaucracy, now we have a corrupt Uber oligarchy, where half the cars you see in the app are fake and they're probably selling your ride history to data merchants in Russia for pennies on the dollar. You don't have to like the taxi system to think that this is kind of a bum deal. Similarly, you don't have to be a fan of advertising, or of advertising-supported journalism, to think that the inevitable outcomes of blocking display will range from bad to worse.

Personally, I think it's healthy to feel wildly uneasy with this entire dynamic, in which tech companies decide to target one bad actor and inflict collateral damage on an entire industry with a nonchalant wave of their hand. I think it's normal to believe that publishers are getting what they deserve for decades of bad management, and still feel like wiping them out is overkill. It's reasonable to think we should have control over the experience as users, while also arguing that media companies need to pay the bills somehow. But then, I'm not exactly disinterested, myself.

Brought to you by everybody, and nobody

I have a post that's been incubating for about two months now, about riot grrrl and open source. I started thinking about it when I watched The Punk Singer, a shockingly good documentary about Bikini Kill frontwoman Kathleen Hanna. And the story of the whole movement that she founded (along with a number of other influential women) is fascinating, because it's based on an entire ethic of self-publication and self-determination. They didn't like the commercial media that they had, so they made new media of their own and taught people how to do the same. To me, that's how open source should feel: undermining centralized power and giving the means of production back to the people.

But there's another way of looking at that, which is to say that riot grrrl zines never changed much of anything and the old open web got lost in the shuffle. We can romanticize both of them as much as we want, but at the end of the day they weren't capable of surviving against moneyed interests, and no amount of self-mythologizing is going to change that. That doesn't mean we should give up, but we need to be realistic about the gap between "should be" and "is," because we're in the middle of it now: readers should pay for journalism; they actively don't want to do so.

Our grim meathook media future

Here's one difficult truth: if you are a reporter, editor, or other news human in the year of our lord 2015, your fate is almost certainly on the web. The New York Times and the hot youth flavor of the day (Vox, Vice, BuzzFeed) may get invited into Instant Articles or Apple News, but everyone else is on their own. App-only publications have been tried, and failed, even with the force of Rupert Murdoch behind them. That leaves the web as the place where a diverse, free press can exist, especially once those print revenues finally dry up.

Here's another: the web is always going to grapple with hostile ads, because it's a platform built on remixing and embedding third-party content. The same things that let advertisers abuse your mobile connection also allow us to host comments via Disqus, or embed media from Twitter or YouTube, or create neat interactive features. Open platforms are messier, which is part of why they grow so effectively, and also why they have a hard time competing with closed, curated platforms. Nobody's going to make it easy for us.

Between those two difficult truths is a spectrum of uncomfortable options, ranging from paywalls to subscriptions to (most likely) bankruptcy. As Casey Johnston says in the Awl piece that opens this post, the likely outcome is the rapid eradication of many sites that currently scrape by on DoubleClick revenue. The small and the quirky are going to take the hit here, even if they're not so small: The Dissolve was shuttered earlier this year, despite a pretty impressive stable of contributors and support, and they won't be the last.

In the very long term, we all die alone. I hesitate to make any other predictions. But I suspect that the eventual fallout of these changes is the hollowing-out of the American media: two big national papers at the top; a horde of niche publications clinging, white-knuckled, to subsistence at the bottom; and not very much in the middle except the non-profits who have opted out of the entire rat race. That this arrangement parallels our national economic inequality is probably not a coincidence, but we're long past the point where anyone wants to hear a systemic critique. Will your favorite publication survive? It's time to spin the wheel and find out.