February 23, 2012

JavaScript as a Second Language

In December, jQuery creator John Resig (who is now working as a coder for self-education startup Khan Academy) wrote a post about teaching JavaScript as a first programming language. I'm a big fan of JavaScript, and by that point I knew I was going to be teaching it to all-new programming students, so I read it with interest. As Resig notes, there's a lot going for JavaScript as a starter language, mainly in the sense that it is ubiquitous, in high demand from employers, and easy to share.

But after spending some time teaching it, I'm not sure that JavaScript is actually a good language for first-time programmers at all--and it has little to do with the caveats that Resig raises, like its falsy values or block scoping. Those are tricky for people who have previous programming experience, because they don't work the way that other languages do. But students who have never programmed before have more fundamental problems that they're trying to wrap their heads around, and JavaScript (in the browser, at least) adds unnecessary complications to the process. My students so far have had the most trouble with:

- Loops - JavaScript has two kinds, one for objects and one for arrays, and they're both confusing for beginners.

- Input - apart from prompt(), there's no standard input. Learning to write "real" inputs means dealing with event listeners, which are complicated.

- Output - There's document.write(), or alert(), or console.log()... or the DOM. I don't know which is worse.

- The difference between defining a function and running a function - including figuring out what arguments mean, and why we use them.
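
To make that list a little more concrete, here's a minimal sketch of the stumbling blocks in one place. The data and names (scores, ages, average) are made up for illustration, and I'm using console.log for output only because it runs anywhere; it's not meant as the "right" way to teach any of this.

```javascript
// Hypothetical example data, not from any real lesson plan.
var scores = [90, 72, 85];
var ages = { alice: 31, bob: 27 };

// Loop kind #1: a counting loop, normally used for arrays.
var total = 0;
for (var i = 0; i < scores.length; i++) {
  total += scores[i];
}

// Loop kind #2: for...in, which walks the keys of an object.
var names = [];
for (var key in ages) {
  names.push(key);
}

// Defining a function only describes the work; nothing runs yet.
function average(list) {
  var sum = 0;
  for (var j = 0; j < list.length; j++) {
    sum += list[j];
  }
  return sum / list.length;
}

// Calling it is what actually runs the code; `scores` is the argument.
var result = average(scores);
console.log(total, names, result);
```

Even in a sample this small, a beginner has to juggle two loop syntaxes, an index variable, and the gap between writing average and actually calling it.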

And don't get me started on the DOM. As a complex, tree-shaped data structure, it's already tough enough to teach students how to traverse and alter it. When you start getting into the common ways that JavaScript interacts with data stored in HTML and CSS, even in relatively simple ways, it becomes extremely confusing for people who have never tried to write a program before--particularly if they're not very experienced with HTML/CSS in the first place. Now they're effectively learning three languages, each with its own quirks, just to do basic things.

This quarter I taught Intro to Programming using JavaScript. I built a simple scratchpad for students to use, so they wouldn't have to install any browser tools or understand HTML/CSS, but the result felt clunky--a lot of work to monkey-patch flaws in the language for the purposes of learning. Next quarter, while there's little I can do about all-new programmers who jump right into Intro to JavaScript, I do plan on using Processing for Intro to Programming itself. While the language syntax is similar to JavaScript, the all-in-one nature of the Processing environment--an editor with a built-in, always-visible console and a big "play" button for running the current sketch--means that I can spend my time teaching the fundamentals of coding, instead of explaining (or hiding) the idiosyncrasies of scripting in a browser.

February 13, 2012

Red Letter Day

Ah, budget day: the most annoying day of a data journalist's year. Even now that I'm no longer covering Congress, it still bugs me a little--except now, instead of being frustrated by the problem of finding stories, I'm just annoyed by the coverage itself. Few serious policy documents create so much noise from so little data.

For those who are unaware, on the night before budget day, each senator or representative places a constituent's tooth under their pillow before going to bed. While they're asleep, dreaming of filibusters and fundraising, the White House Chief of Staff creeps into their bedrooms and takes the tooth away, leaving a gift in return. Oh, the cries of joy when the little congresscritters wake to find a thick trio of paperback budget documents waiting for them!

Casting the budget as a fairy tale isn't as snarky as it might seem, because the president's budget is almost entirely wishful thinking. The executive branch, after all, does not control the purse strings of government--that power lies with the legislature. The budget is valuable in that it sets an agenda and expresses priorities, but any numbers in it are a total pipe dream until the appropriations process finishes. And if you want an example of how increasingly dysfunctional Congress has become, look no further than appropriations.

Although money for the next fiscal year is supposed to be allocated into appropriations bills by October 1st (the start of the federal fiscal year), they are increasingly late, often months late. In the meantime, Congress passes what are called "continuing resolutions"--stopgap measures that fund the government at (usually) reduced levels until real funding is passed. You can actually see the delays getting worse in a couple of graphics that my team put together at CQ: first, the number of "bill days" delayed since 1983, and then the number of "bill months" delayed by committee since 1990. Needless to say, this probably isn't helping the federal government run at its most efficient.

The connection between the president's budget and the resulting sausage is therefore tenuous at best (don't even get me started on tracking funds through appropriations itself). Even worse, from the perspective of a data-oriented reporter, is that the numbers in the budget are not static. They are revised multiple times by the White House in the months after release--and not only are they revised, they are often revised retroactively as new economic data comes in and the numbers must be adjusted to fit the actual policy environment. So even if we could talk about budget numbers as though they were "real money," the question remains: which budget numbers? And from when?

During my first couple of years at CQ, around January I would sit down with the Budget Tracker team and the economics editor, and propose a whole series of cool interactive features for budget season. And each time, they would politely and carefully explain all these caveats, which collectively added up to: we could talk about the budget in print, where numbers would not be charted against each other, and we could talk about the ways the budget/appropriations process is broken. But there simply isn't enough solid data to graph or visualize those numbers, since that lends them a visual credibility that they don't actually have.

The result is that I find budget day frustrating, even after leaving the newsroom, because it feels like a failure--something we should have been able to explain to our readers more fully, but couldn't quite grasp ourselves. Simultaneously, I often find coverage by other outlets annoying because they report on the budget as though it's more meaningful than it actually will be, or they'll chart it across visualizations as though imaginary numbers could be compared to each other (there is an element of jealousy to this, no doubt: it must be nice to work in a place where you can get away with a little editorial sloppiness). It's a shame, because the budget itself is not broken. As an indication of what the White House thinks is important for the upcoming year, it's a great resource. But it is not a long-term financial plan, and shouldn't be reported as such.

February 5, 2012

UA All Day

While I've been waiting on my background check to clear for my new day job, when I'm not teaching and/or working on lesson plans, and clearly in lieu of blogging, I've been working on improving the Urban Artistry web site. A lot of it has been rearranged and re-written, with the goal of making it punchier, with stronger calls to action on every page. It's also mobile-friendly, has more modern CSS, and removes about 6 years of accumulated detritus.

The other big initiative I've been working on for UA since the new year is this year's International Soul Society Festival. Last year's Soul Society page was a last-minute effort--we got the job done despite short deadlines and unreliable resources. This time, I wanted to fix some of the problems it had with print-oriented design, with mobile, and with being a high-maintenance, single-page site.

If you're in the DC area come late April, Soul Society is the place to be. Last year's festival had popping and b-boy battles, all-styles cyphers, great music and art, and some incredible judges' exhibitions. I'll be heading back to Virginia to attend myself. Hope to see you there!

January 18, 2012

Your Scattered Congresses

Once more with feeling: today, I'm happy to bring you my last CQ vote study interactive. This version is something special: although it lacks the fancy animations of its predecessor, it offers a full nine years of voting data, and it does so faster and in more detail. Previously, we had only offered data going back to 2009, or a separate interactive showing the Bush era composite scores.

We had talked about this three-pane presentation at CQ as far back as two years ago, in a discussion with the UX team on how they could work together with my multimedia team. Our goal was to lower the degree to which a user had to switch manually between views, and to visually reinforce what the scatter plot represents: a spatial view of party discipline. I think it does a pretty good job, although I do miss the pretty transitions between different graph types.

Technically speaking, loading nine years of votestudy data was a challenge: that's almost 5,000 scores to collect, organize, and display. The source files necessarily separate member biodata (name, district, party, etc) from the votestudy data, since putting the two into the same data structure would bloat the file size from repetition (many members served in multiple years). But keeping them separate causes a lag problem while interacting with the graphic: doing lookups based on XML queries tends to be very slow, particularly over 500K of XML.

I tried a few tricks to find a balance between real-time lookup (slow interaction, quick initial load) and a full preprocessing step (slow initial load, quick interactions). In the end, I went with an approach that processes each year when it's first displayed, adding biodata to the votestudy data structure at that time, and caching member IDs to minimize the lookup time on members who persist between years. The result is a slight lag when flipping between years or chambers for the first time, but it's not enough to be annoying and the startup time remains quick.
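
The approach above can be sketched roughly like this. To be clear, this is not the code from the interactive: it's a JavaScript illustration with invented data shapes (members, scoresByYear) and an array scan standing in for the slow XML query. The idea is just that each year gets joined to biodata the first time it's shown, and member lookups are cached by ID so they're only slow once.

```javascript
// Hypothetical data shapes: the real source files were XML.
var members = [
  { id: "M1", name: "Ann Example", party: "D" },
  { id: "M2", name: "Bob Sample", party: "R" }
];
var scoresByYear = {
  2010: [{ id: "M1", unity: 92 }, { id: "M2", unity: 88 }],
  2011: [{ id: "M1", unity: 95 }]
};

var memberCache = {};    // id -> biodata, filled on first lookup
var processedYears = {}; // year -> joined rows, filled on first display
var slowLookups = 0;     // counts how often we fall back to the slow scan

// Stand-in for the slow XML query: a linear scan over all members.
function findMember(id) {
  if (memberCache[id]) return memberCache[id];
  slowLookups++;
  for (var i = 0; i < members.length; i++) {
    if (members[i].id === id) {
      memberCache[id] = members[i];
      return members[i];
    }
  }
  return null;
}

// Join biodata onto a year's scores the first time that year is shown.
function getYear(year) {
  if (processedYears[year]) return processedYears[year];
  var rows = scoresByYear[year].map(function (score) {
    var bio = findMember(score.id);
    return {
      id: score.id,
      unity: score.unity,
      name: bio ? bio.name : null,
      party: bio ? bio.party : null
    };
  });
  processedYears[year] = rows;
  return rows;
}
```

Calling getYear(2010) and then getYear(2011) only hits the slow path twice, because the member who appears in both years is already cached by the time the second year is processed.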

(In a funny side note, working with just the score data is obscenely quick. It's fast enough, in fact, that I can run through all nine years to find the bounds for the unity part of the graph, to keep it consistent from year to year, in less than a millisecond. That's fast enough that I can be lazy and do that before every re-render--as long as I don't need any names. Don't optimize prematurely, indeed.)
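
For what it's worth, that bounds pass is about as simple as code gets. Here's a sketch in JavaScript, again with made-up numbers and a hypothetical unityByYear structure rather than the real data:

```javascript
// Hypothetical score data: year -> list of unity scores.
var unityByYear = {
  2009: [88, 95, 61],
  2010: [91, 79],
  2011: [97, 84, 70]
};

// Scan every score in every year and track the smallest and largest.
function unityBounds(byYear) {
  var min = Infinity;
  var max = -Infinity;
  for (var year in byYear) {
    var scores = byYear[year];
    for (var i = 0; i < scores.length; i++) {
      if (scores[i] < min) min = scores[i];
      if (scores[i] > max) max = scores[i];
    }
  }
  return { min: min, max: max };
}

var bounds = unityBounds(unityByYear);
```

With only a few thousand numbers and no lookups involved, there's nothing here worth caching.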

The resulting graphic is typical of CQ interactives, in that it's a direct view on our data without a strong editorial perspective--we don't try to hammer a story through here. That said, I think there's some interesting information that emerges when you can look at single years of data going back to 2002:

- The Senate is generally much more supportive of the president than the House is. While you can't directly compare scores across chambers (because the votes are different), the trend is striking. It's well known that House members tend to be more radical than senators, but I suspect the difference is also procedural: in the House, the leadership controls the agenda much more tightly than in the Senate, which can be held up by filibuster. As a result, the House may vote on bills that would never reach the Senate floor, just because the majority party can force the issue.

- Although the conventional wisdom on the left since the Gingrich years has been that Republican discipline is stronger for political reasons, I'm not sure that's entirely borne out by these graphics. Party unity over the last nine years appears roughly symmetrical most of the time, while presidential support (and opposition) appears to shift in direct response to the strength of the White House due to popularity and/or election status. 2007-2009 was a particularly strong time for the Democrats in terms of uniting around or against a presidential agenda, for obvious reasons. This year the Republicans rallied significantly, particularly in the House.

- There is one person who's explicitly taken out of the graphs (and not removed due to lack of participation or other technical reasons). That person is Zell Miller, everyone's favorite Bush-era iconoclast. If you're like me, you haven't thought about Zell Miller in 6 or 7 years, but there he was when I loaded the Senate file for the first time. Miller voted against his party so often that he had ridiculously low scores in 2003 and 2004, resulting in a vast expanse of white space on the plots with one lonely blue dot at the bottom. Rather than let him make everyone too small to click, I dropped him from the dataset as an outlier.

Finally, I did mention that this is my last CQ votestudy interactive. It's been a fantastic ride at Congressional Quarterly, and I'm grateful for the opportunities and education I received there. But it's time to move on, and to find something closer to home here in Seattle: at the end of this month, I'll be starting in a new position, doing web development at Big Fish Games. Wish me luck!

December 28, 2011

The Social Net Work

Moving all the way across the country, I'm finding social media invaluable for maintaining connections with my friends back in DC. It's no substitute for actually being there, but it's not supposed to be: instead, I get peeks into the life of my coworkers, the other members of Urban Artistry, and my other East Coast friends--just enough information that I still feel like I know how they're doing (and, hopefully, vice versa).

But which social media? I'm active on at least three, all of which I use (and which complement this blog) in different ways:

- Twitter typically takes the place of a linkblog. It's where I post quick, hit-and-run content that I find interesting, but not enough that I want to comment on it at length. I follow more people who don't know me on Twitter than I do anywhere else.

- Facebook is not my preferred social network, because they've shown again and again that given the option of maintaining my privacy or selling me out, they will reach for the check every single time, and they don't seem to understand why people get upset when they do. But all my friends are on Facebook, and therefore so am I.

- Somewhere in the middle is Google+. They've made missteps (no pseudonyms) but the basic idea is good. I like the way G+ is built for short-to-medium length writing: I don't want to write blog posts there, but it gives me enough room to tell a decent joke. Unfortunately, most people still haven't moved over, and there aren't any desktop clients for posting.

Of course, this is not a battle to the death. I don't have a problem picking the right place to post something (in fact, I kind of welcome the audience segmentation). But it's such a hassle, switching between windows or re-opening old pages to check for updates. What I really want is a single method of subscribing to updates from all of these services (and more) and optionally posting to them from a single box--what Warren Ellis calls One Big Thing Everywhere.

But that isn't something that the people developing social networks seem to be interested in facilitating. You can't get a decent RSS feed from any of these services anymore. And even building such a thing myself would be prohibitively difficult: Twitter's API is increasingly dense, Facebook's is notoriously hostile, and the G+ API is practically non-existent.

This is not a coincidence, of course, just as it's not a coincidence that Facebook has removed RSS as an input option and that Google recently integrated Reader directly into G+. My personal opinion is that these companies are working hard, both directly and indirectly, to kill decentralized syndication standards like RSS in favor of gateways that they can control.

All of which is the kind of thing that I shouldn't have to care about. But these days, as Lawrence Lessig observed in Code, we're writing our social and legal values into software. In this case, it's the software that helps me keep in touch with my friends and family back on the East Coast. That's more than just inconvenient--it's a disturbing amount of power over our personal connections. Ironically, it's only when I use social networks the most that I seriously consider giving them up.

December 20, 2011

Console-less in Seattle

Belle and I thought our shipping containers full of all our worldly possessions would arrive from Virginia on December 8th. Turns out they hadn't left the East Coast. Now they're due Friday, we hope. Merry Christmas: we got ourselves all of our own stuff!

So in addition to missing our bed, our cooking utensils, and all our books, I've also been out of luck when it comes to console games this month. This is a funny reversal from the month where my laptop was out of commission. I like this better, though: PC gaming was where I started, and its independent development scene still puts together the most interesting titles anywhere, in my opinion. So I've been having fun knocking out some of my PC backlog, left over from Steam sales and random downloads. Here's a sampling:

Don't Take It Personally, Babe, It's Just Not Your Story has the longest title since the Dejobaan catalog, and if title length were an indicator of quality, it would be really good. Unfortunately, it's not. Where its predecessor, Digital: A Love Story, was a mix of 1990 BBS hacking with a cute story to overcome its repetitive "hunt the phone number" mechanics, Don't Take It Personally is basically just one of those Japanese choose-your-own-adventure games, except with extra tedious high school drama. You click "next" a lot, is what I'm saying. The theme it's trying to present isn't nearly strong or coherent enough to overcome that.

I love Brendon Chung's Flotilla, which remains the weirdest--but most compelling--version of full-3D space combat I've ever been able to find, and it scratches a quick-play itch that I can't get from Sins of a Solar Empire. Since I was having a good time revisiting it, I went looking for Chung's other games and found Atom Zombie Smasher on Steam. A mix of tower defense, Risk, and randomly-generated RTS, AZS is one of those games where you think "this isn't that great," and then realize you've been playing until two in the morning. It also has a deceptively complicated learning curve.

One of the games I've had sitting around on Steam from an old sale was Far Cry (and its sequel, but I doubt my 2007-era Thinkpad will run Far Cry 2 very well). For some reason I seem to have formed a lot of ideas about this game that weren't true: I thought it was an open-world shooter (it's not), I thought it would be dynamic like STALKER (definitely not), and I thought it was supposed to be a decent game (it's pretty boring). The one thing I'll say for it is that I do like the honest effort at making "jungle" terrain, instead of the typical "corridor shooter with tree textures," but that wasn't enough to keep me playing past the first third of the game.

Another holdover, one that fared much better, was Oddworld: Stranger's Wrath. It's not a comedy game per se, but it is often very funny: although it lacks the satirical edge and black humor of Oddysee and Exoddus, it retains their gift for writing hilariously dim-witted NPCs. It's also deeply focused on boss battles, which I kind of love (I felt the same way about No More Heroes, for similar reasons). The controls suffer a little on a PC (this is a game that really benefits from analog movement), and there are a couple of out-of-place difficulty spikes, but otherwise it was great to revisit the Oddworld.

I couldn't quite get behind indie adventure Trauma, unfortunately. It's a very PC title, part Myst and part Black and White, but it feels bloodless. Ostensibly the fever-dream of a photographer caught between life and death after a car wreck, the game's bland narration and ambient music never give you any particular impulse to care about her, or reason to believe that she herself cares whether she lives or dies. An abrupt ending doesn't help. Trauma is arty, but there's no arc to it.

Speaking of indies, I finally beat the granduncle of the modern independent game, Cave Story. It's an impressive effort (especially given that there's an entire series of weapons and powerups in there that I completely skipped), but I'm not sure that I get all the love. The platforming is floaty--even at the end, there were a lot of jumps I would have missed without the jetpack--and the "experience" system seems to undermine the shooting (if you start to lose a fight, your weapons will downgrade, meaning you'll lose it faster). That said, it really does feel like a lost NES game, dug up and somehow dropped into Windows. The parts that are good--the music, the sprite art, and the Metroid-style progression--are all very good. But the parts that are frustrating, particularly a couple of incredibly frustrating checkpoints, are bad enough that I spent half the game on the edge of quitting in search of better entertainment.

Finally, I just started playing Bastion. The gorgeous texture work pushes my older video card, but I think it makes up for it by pushing a relatively low amount of geometry, so it plays pretty well. The clever narration gimmick is strong enough to make up for the fact that it's basically Diablo streamlined to the absolute minimum. I've never really been a fan of Diablo-style games--I don't care about the grind, and the controls feel strange to me--but we'll see if the story is enough to pull me through it.

December 9, 2011

Trapped in WebGL

As a web developer, it's easy to get the feeling that the browser makers are out to get you (the standards groups definitely are). The latest round of that sinking feeling comes from WebGL, which is, as far as I can tell, completely insane. It's a product of the same kind of thinking that said "let's literally just make SQLite the web database standard," except that for some reason Mozilla is going along with it this time.

I started messing with WebGL because I'm a graphics programmer who never really learned OpenGL, and that always bothered me a little. And who knows? While I love Flash, the idea of hardware-accelerated 3D for data visualization was incredibly tempting. But WebGL is a disappointment on multiple levels: it's completely alien to JavaScript development, it's too unsafe to be implemented across browsers, and it's completely out of place as a browser API.

A Square Peg in a JavaScript-shaped Hole

OpenGL was designed as an API for C a couple of decades ago, and even despite constant development since then it still feels like it. Drawing even a simple shape in OpenGL ES 2.0 (the basis for WebGL) requires you to run some inscrutable setup functions on the GL context using bit flags, assemble a shader program from vertex and fragment shaders written in a completely different language (we'll get to this later), then pass in an undistinguished stream of vertex coordinates as a flat, 1D array of floating point numbers. If you want other information associated with those vertices, like color, you get to pass in another, entirely separate, flat array.

Does that sound like sane, object-oriented JavaScript? Not even close. Yet there's basically no abstraction from the C-based API when you write WebGL in JavaScript, which makes it an incredibly disorienting experience, because the two languages have fundamentally different design goals. Writing WebGL requires you to use the new ArrayBuffer types to pack your data into buffers for the GL context, and acquire "pointers" to your shader variables, then use getter and setter functions on those pointers to actually update values. It's confusing, and not much fun. Why can't we just pass in objects that represent the vertexes, with x, y, z, vertex color, and other properties? Why can't the GL context hand us an object with properties matching the varying, uniform, and attribute variables for the shaders? Would it kill the browser, in other words, to pick up even the slightest amount of slack?
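
Here's a small sketch of the translation step I'm complaining about. The vertex objects and the packVertices helper are my own invention for illustration, not part of WebGL; the only real API in the block is the Float32Array typed array. It takes the kind of objects I'd like to hand to the browser and flattens them into the separate 1D arrays that the GL context actually wants.

```javascript
// Hypothetical vertex objects, the shape I wish WebGL accepted directly.
var triangle = [
  { x: 0, y: 1, z: 0, color: [1, 0, 0] },
  { x: -1, y: -1, z: 0, color: [0, 1, 0] },
  { x: 1, y: -1, z: 0, color: [0, 0, 1] }
];

// Flatten into two separate flat float arrays: one for positions,
// one for colors, three numbers per vertex in each.
function packVertices(vertices) {
  var positions = new Float32Array(vertices.length * 3);
  var colors = new Float32Array(vertices.length * 3);
  vertices.forEach(function (v, i) {
    positions.set([v.x, v.y, v.z], i * 3);
    colors.set(v.color, i * 3);
  });
  return { positions: positions, colors: colors };
}

var packed = packVertices(triangle);
```

In a browser, each of those arrays would still need to be uploaded with something like gl.bufferData(gl.ARRAY_BUFFER, packed.positions, gl.STATIC_DRAW) and then wired up to a shader attribute by hand, which is exactly the busywork I'd expect an API at JavaScript's level to do for me.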

Now, I know that API design is hard and probably nobody at Mozilla or Google had the time to code an abstraction layer, not to mention that they're all probably old SGI hackers who can write GL code in their sleep. But it cracks me up that normally browser vendors go out of their way to reinvent the wheel (WebSockets, anyone?), and yet in this case they just threw up their hands and plunked OpenGL into the browser without any attempt at impedance matching. It's especially galling when there are examples of 3D APIs that are intended for use by object-oriented languages: the elephant in the room is Direct3D, which has a slightly more sane vertex data format that would have been a much better match for an object-oriented scripting language. Oh, but that would mean admitting that Microsoft had a good idea. Which brings us to our second problem with WebGL.

Unsafe at Any Speed

Microsoft has come right out and said that they won't add WebGL to IE for security reasons. And although they've caught a lot of flak for this, the fact is that they're probably right. WebGL is based on the programmable pipeline version of OpenGL, meaning that web developers write and deliver code that is compiled and run directly on the graphics card to handle scene transformation and rendering. That's pretty low-level access to memory, being granted to arbitrary people on the Internet, with security only as strong as your video driver (a huge attack surface that has never been hardened against attack). And you thought Flash was a security risk?

The irony as I see it is that the problem of hostile WebGL shaders only exists in the first place because of my first point: namely, that the browsers using WebGL basically just added direct client bindings to canvas, including forcing developers to write, compile, and link shader programs. A 3D API that was designed to abstract OpenGL away from JavaScript developers could have emulated the old fixed-function pipeline through a set of built-in shaders, which would have been both more secure and dramatically easier for JavaScript coders to tackle.

Distraction via Abstraction

Most programming is a question of abstraction. I try to train my team members at a certain level to think about their coding as if they were writing an API for themselves: write small functions to encapsulate some piece of functionality, test them, then wrap them in slightly larger functions, test those, and repeat until you have a complete application. Programming languages themselves operate at a certain level of abstraction: JavaScript hides a number of operating details from the developer, such as the actual arrangement of memory or threads. That's part of what makes it great, because managing those things is often a huge pain that's irrelevant to making your web application work.
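
As a toy illustration of that layering (the function names and the 400-pixel chart width are invented for the example), each function below is small enough to test on its own, and each one is built out of the one before it:

```javascript
// Smallest piece: keep a value inside a range.
function clamp(value, min, max) {
  return Math.min(max, Math.max(min, value));
}

// Built on clamp: convert a value to a 0..1 fraction of its range.
function normalize(value, min, max) {
  return (clamp(value, min, max) - min) / (max - min);
}

// Built on normalize: place a 0-100 score on a hypothetical 400px axis.
function toPixel(score) {
  return Math.round(normalize(score, 0, 100) * 400);
}
```

By the time you're calling toPixel, you no longer have to think about clamping or ranges at all, which is the whole point.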

Ultimately, the problem of WebGL is one of proper abstraction level. I tend to agree with JavaScript developer Nicholas Zakas that a good browser API is mid-level: neither so low that the developer has to understand the actual implementation, nor so high that it makes strong assumptions about usage patterns. I would argue that WebGL is too low--that it requires developers to effectively learn a C API in a language that's unsuited for writing C-style code, and that the result is a security and support quagmire.

In fact, I suspect that the reason WebGL seems so alien to me, even though I've written C and Java code that matched its style in the past, is that it's actually a lower-level API than the language hosting it. At the very minimum, a browser API should be aligned with the abstraction level of the browser scripting language. In my opinion, that means (at the very least) providing a fixed-function pipeline, using arrays of native JavaScript objects to represent vertex lists and their associated data, and providing methods for loading textures and data from standard image resources.

In Practice (or, this is why I'm still a Flash developer)

Let me give an example of why I find this entire situation frustrating--and why, in many ways, it's a microcosm of my feelings around developing for so-called "HTML5." Urban Artistry is working on updating our website, and one of the artistic directors suggested adding a spinning globe, with countries where we've done international classes or battles marked somehow. Without thinking too much I said sure, I could do that.

In Flash, this is a pretty straightforward assignment. Take a vector object, which the framework supports natively, color parts of it, then either project that onto the screen using a variation of raycasting, or actually load it as a texture for one of the Flash 3D engines, like PaperVision. All the pieces are right there for you, and the final size of the SWF file is probably about 100K, tops. But having a mobile-friendly site is a new and exciting idea for UA, so I thought it might be nice to see if it could be done without Flash.

In HTML, I discovered, fewer batteries come included. Loading a vector object is easy in browsers that support SVG--but for Android and IE, you need to emulate it with a JavaScript library. Then you need another library to emulate canvas in IE. Now if you want to use WebGL or 3D projection without tearing your hair out, you'd better load Three.js as well. And then you have to figure out how to get your recolored vector image over into texture data without tripping over the browser's incredibly paranoid security measures (hint: you probably can't). To sum up: now you've loaded about half a meg of JavaScript (plus vector files), all of which you have to debug in a series of different browsers, and which may not actually work anyway.

When faced with a situation like this, where a solution in much-hated Flash is orders of magnitude smaller and easier to code, it's hard to overstate just how much of a setback HTML development can be--and I say that as someone who has grown to like HTML development much more than he ever thought possible. The impression I consistently get is that neither the standards groups nor the browser vendors have actually studied the problems that developers like me commonly rely on plugins to solve. As a result, their solutions tend to be either underwhelming (canvas, the File APIs, new semantic elements) or wildly overcomplicated (WebGL, WebSQL, WebSockets, pretty much anything with a Web in front of it).

And that's fine, I'll still work there. HTML applications are like democracy: they're the worst platform possible, except for most of the others. But every time I hear someone tell me that technologies like WebGL make plugins obsolete, my eyes roll so hard I can see my optic nerve. The replacements I'm being sold aren't anywhere near up to the tasks I need to perform: they're harder to use, offer me less functionality and lower compatibility, and require hefty downloads to work properly. Paranoid? Not if they're really out to get me, and the evidence looks pretty convincing.

December 6, 2011

Open House

Traveling in the modern age, no matter the distance, can be partially described as "the hunt for the next electrical outlet." The new stereo in our car has a USB port, which was a lifesaver, but each night played out similarly during our cross country trip: get into the hotel, let out the cat, comfort the dog, feed both, and then ransack every electrical socket in the place to recharge our phones and laptops.

I don't drive across the entire southern USA often, but I do spend a lot of time on public transit. So I've come to value devices that don't require daily charging, and avoid those that do. Until recently, I would have put my Kindle 2 in the former category, but over the last year, it has started discharging far too quickly--even after a battery replacement. There's clearly something wrong with it.

It's possible that the problem is simply three years of rough handling and being carried around in a messenger bag daily. Maybe something got bent a little bit more than the hardware could handle. I suspect that it's gotten stuck on some kind of automated process, indexing maybe, and as such it's not going to sleep properly. That has led to all kinds of voodoo troubleshooting: deleting random books, disabling collections, even running a factory reset and reloading the whole thing. Has any of it worked? Um... maybe?

The thing is, I don't know. I don't know for certain what's wrong, or how to fix it, or if any of my ad-hoc attempts are doing any good. There's a huge void where knowledge should be, and it's driving me crazy. This is one of the few cases where I actually want to spend time providing my own tech support (instead of needlessly buying a whole new device)--and I can't. Because the Kindle, even though it's a Linux device under the hood, is an almost totally closed system. There's nowhere that I, or anyone else, can look for clues.

I was reminded of how much I've come to rely on open systems, not just by my Kindle worries, but by checking out some of the source code being used for Occupy Wall Street, particularly the OpenWRT Forum. That's a complete discussion forum written to run on a Linksys WRT wireless router--the same router I own, in fact. Just plug it into the wall, and instantly any wifi device within range can share a private space for discussion (handy, when you're not allowed to have amplified sound for meetings).

The fact that some enterprising coders can make a just-add-electricity collaboration portal out of off-the-shelf electronics owes everything to open source, albeit reluctantly. Linksys was forced, along with a lot of other router manufacturers, to release the source code for their firmware, since it was based on GPL code. That meant lots of people started messing with these boxes, in some cases to provide entirely new functionality, but in others just to fix bugs. That's how I ended up with one, actually: after we had a router crash repeatedly, I went looking for one that would have community support, as opposed to the original Linksys "release and forget" approach to customer service.

When something as faceless as a router can get new life, and clever new applications, through open source, it makes it all the more galling that I'm still having to play guessing games with my Kindle's battery issues. Indeed, it's frustrating that these "post-PC" closed systems are the path so many companies seem hell-bent on pursuing: closed software running on disposable, all-in-one hardware. The result is virtually impossible to repair, at either the software or the physical level.

I'm not asking for open source everything--your microwave is safe. I'm not even necessarily arguing for strictly open source: I still run Windows at home, after all. I don't want to hack things for no good reason. What I think we deserve is open access, a backdoor into our own property. Enough of our lives are black boxes already. Ordinary people don't need to see that backdoor or use it, but if something goes wrong, the ability to at least look for an answer shouldn't be too much to ask.

December 1, 2011

The Long Way Home

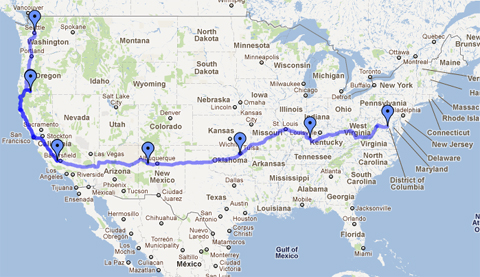

I'm writing from our new home, Seattle! We arrived at our new apartment yesterday, after a long, six-day road trip across the country with the dog and the cat in the back seat.

Turns out there's a lot of country between the two coasts. I wouldn't necessarily want to do it again, but it was neat to drive through parts of the United States that I'd never seen, like the deserts of New Mexico or the overwhelming flatness of Oklahoma, and to revisit a few, like my birthplace of Lexington, KY.

In any case, now we're just settling in, getting to know our new neighborhood, and waiting for the shipping containers to arrive with all our stuff. Regular blogging should return soon. Thanks for your patience!

November 18, 2011

Kindle Fire: Bullet Points

- If you strictly want to read e-books, you're better off with an e-ink Kindle. Reading on an LCD is still annoying, and the Kindle application is choppy.

- It's pretty great as a streaming media player, as long as you have a Netflix account or an Amazon Prime membership, or both. You can't cache either of those offline, though, which means it's not great away from wifi.

- The music player is fine, but I'm completely spoiled by Zune Pass, and I don't really need yet another device that plays MP3s.

- Belle doesn't like the speakers much. I think if we were meant to listen through speakers, God wouldn't have given us good noise-blocking headphones.

- I don't have any experience with other tablets, nor do I particularly think there's anything earth-shattering about the idea of a tablet, so I'm the wrong person to ask whether it's a good fit within that niche.

- That said, if you're looking for a cheap Android tablet, I'm not aware of anything better than the Fire for the price.

- As soon as someone releases a decent launcher that doesn't crash instantly, you will want to replace (supplement?) Amazon's weird launcher. "Cover Flow" is not a useful browsing UI when, like me, you own a couple hundred e-books.

- I'm enjoying the ability to sideload applications on the Fire a bit too much. It's like a flashback to the days when people used to install software from websites (or, heaven forbid, a disc from an actual store) instead of a centralized, curated, for-profit repository. "Oh, Amazon doesn't let other e-book readers or browsers in their store? Who cares?"